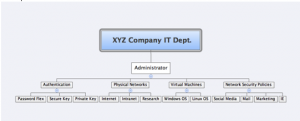

To know what your organization needs for security, you first need to define what you have. Defining and organizing your assets can be intimidating, especially if it has been a long time since anyone did it. However, with a mind mapping tool such as Inspiration or the free tool XMind, your asset inventory will come together quickly.

It is important to define a “Who, What, Where, and How” when assessing your environment. Who has access? What programs are running, and on which machines? Where does the data that could be compromised reside? How is the environment secured?

Creating a map lets you follow relationships easily, so you can assign tasks accordingly. A map also reveals relationships that previously went unnoticed.

As the various network relationships are mapped out, it will be easier to see what would be affected in your enterprise should a data breach occur.

If Server A is compromised, incident responders can quickly assess which other components may have been affected by reviewing its trust relationships. A clear depiction of component dependencies also eases the re-architecture process, allowing for faster, more efficient upgrades. Creating a physical map that follows data flow and trust relationships helps ensure that components are not forgotten.
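The same idea can be sketched in a few lines of Python once the map is written down as data. This is only an illustration: the component names and the `TRUSTED_BY` dictionary are hypothetical, and the sketch assumes that an entry `A: [B, ...]` means "B trusts A", so a compromise of A may extend to B. A breadth-first walk then lists everything a responder might want to review.

```python
from collections import deque

# Hypothetical trust map (not from any real environment).
# Assumption: "A": ["B"] means B trusts A, so a compromise of A may reach B.
TRUSTED_BY = {
    "Server A": ["File Server", "Web App"],
    "Web App": ["Database"],
    "File Server": [],
    "Database": [],
    "Mail Server": ["Database"],
}


def potentially_affected(trust_map, compromised):
    """Return components reachable from `compromised`, in breadth-first order."""
    seen = {compromised}
    order = []
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for neighbor in trust_map.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


print(potentially_affected(TRUSTED_BY, "Server A"))
# → ['File Server', 'Web App', 'Database']
```

Components closest to the compromised machine appear first, which roughly matches the order in which a responder might check them. Mail Server does not appear because, in this made-up map, nothing links it back to Server A.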

Finally, categorizing system functions eases the enclaving process. So mind map your way to security and reach your destination of a safer enterprise.