One of the least understood parts of MicroSolved is how the HoneyPoint Internet Threat Monitoring Environment (#HITME) data is used to better protect our customers.

If you don’t know about the #HITME, it is a set of deployed HoneyPoints that gather real-world, real-time attacker data from around the Internet. The sensors gather attack sources, frequency, targeting information, vulnerability patterns, exploits, malware, and other crucial event data for the technical team at MSI to analyze. You can even follow the real-time updates of attacker IPs and target ports on Twitter by following @honeypoint or the #HITME hashtag. MSI licenses the data under a Creative Commons non-commercial license, FREE, as a public service to the security community.

That said, how does the #HITME help MSI better protect its customers? First, it allows folks to use the #HITME feed of known attacker IPs as a blacklist to block known scanners at their borders. This prevents the scanning tools and malware probes from ever reaching you in the first place.

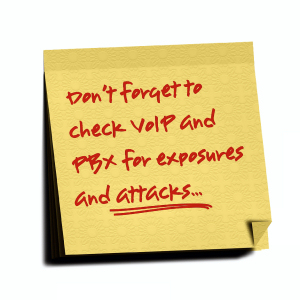
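The blacklist idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the feed format (one IP address per line, with optional `#` comments) is hypothetical and not the documented #HITME format, the function names `parse_attacker_ips` and `to_iptables_rules` are made up for this example, and the sample addresses come from reserved documentation ranges rather than real attacker data.

```python
import ipaddress


def parse_attacker_ips(feed_text):
    """Return unique, valid IP addresses from a one-entry-per-line feed.

    The feed layout is an assumption made for this sketch.
    """
    seen = set()
    ips = []
    for raw in feed_text.splitlines():
        entry = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not entry:
            continue
        try:
            ip = ipaddress.ip_address(entry)
        except ValueError:
            continue  # skip anything that is not a well-formed address
        if ip not in seen:
            seen.add(ip)
            ips.append(ip)
    return ips


def to_iptables_rules(ips):
    """Build one DROP rule per address (iptables for IPv4, ip6tables for IPv6)."""
    rules = []
    for ip in ips:
        tool = "iptables" if ip.version == 4 else "ip6tables"
        rules.append(f"{tool} -A INPUT -s {ip} -j DROP")
    return rules


if __name__ == "__main__":
    # Hypothetical sample feed; addresses are from documentation ranges.
    sample_feed = """
    192.0.2.10
    198.51.100.7
    192.0.2.10
    not-an-ip
    2001:db8::1
    """
    for rule in to_iptables_rules(parse_attacker_ips(sample_feed)):
        print(rule)
```

The script only prints the rules so they can be reviewed before anything is applied. Validating and de-duplicating entries first keeps a malformed feed line from ending up in a firewall command.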

Next, the data from the #HITME is analyzed daily, and the newest, bleeding-edge attack signatures get added to the MSI assessment platform. That means that customers with ongoing assessments and vulnerability management services from MSI get continually tested against the most current forms of attack being used on the Internet. The #HITME data is also fed into the MSI pen-testing and risk assessment methodologies, focusing our testing on real-world attack patterns much more than vendors who rely on typical scanning tools and outdated threats from their last “yearly bootcamp”.

The #HITME data even flows back to the software vendors through a variety of means. MSI shares new attacks and possible vulnerabilities with the vendors, as well as with open source projects targeted by attackers. Often MSI teaches those developers about the vulnerability, the possibilities for mitigation, and how to apply secure coding techniques like proper input validation. The data from the #HITME is used to provide the attack metrics and pattern information that MSI presents in its public speaking, the blog, and other educational efforts. Last, but certainly not least, MSI provides an ongoing alerting function for organizations whose machines are compromised. MSI contacts critical infrastructure organizations whose machines turn up in the #HITME data and works with them to mitigate the compromise and manage the threat. These data-centric services are provided pro bono in 99% of cases!

If your organization would be interested in donating an Internet-facing system to the #HITME project to further these goals, please contact us. Our hope is that the next time you hear about the #HITME, you’ll get a smile on your face knowing that the members of our team are working hard day and night to protect MSI customers and the world at large. You can count on us. We’ve got your back!

We’re kicking off the week by talking about some of our favorite feeds on Twitter!