Monitoring: A True Necessity

There is no easier way to shut down the interest of a network security or IT administrator than to say the word “monitoring”. You can just mention the word and their faces fall as if a rancid odor had suddenly entered the room! And I can’t say that I blame them. Most organizations do not recognize the true necessity of monitoring, and so do not provide proper budgeting and staffing for the function. As a result, IT or security personnel who are already fully tasked (and often inadequately prepared) are handed the job. This not only leads to resentment, but also virtually guarantees that the job will not be performed effectively.

And when I say human monitoring is necessary if you want to achieve any type of real information security, I mean it is NECESSARY! You can have network security appliances, third party firewall monitoring, anti-virus packages, email security software, and a host of other network security mechanisms in place and it will all be for naught if real (and properly trained) human beings are not monitoring the output. Why waste all the time, money and effort you have put into your information security program by not going that last step? It’s like building a high and impenetrable wall around a fortress but leaving the last ten percent of it unbuilt because it was just too much trouble! Here are a few tips for effective security monitoring:

- Properly illustrate the necessity for human monitoring to management, business and IT personnel; make them understand the urgency of the need. Make a logical case for the function. Tell them real-world stories about other organizations that have failed to monitor and the consequences that they suffered as a result. If you can’t accomplish this step, the rest will never fall in line.

- Ensure that personnel assigned to monitoring tasks of all kinds are properly trained in the function; make sure they know what to look for and how to deal with what they find.

- Automate the logging and monitoring function as much as possible. The process is difficult enough without having to perform tedious tasks that a machine or application can easily do.

- Ensure that you have log aggregation in place, and also ensure that other network security tool output is centralized and combined with logging data. Real world cyber-attacks are often very hard to spot. Correlating events from different tools and processes can make these attacks much more apparent.

- Ensure that all personnel associated with information security communicate with each other. It’s difficult to effectively detect and stop attacks if the right hand doesn’t know what the left hand is doing.

- Ensure that logging is turned on for everything on the network that is capable of it. Attacks often start on client-side machines.

- Don’t just monitor technical outputs from machines and programs, monitor access rights and the overall security program as well:

- Monitor access accounts of all kinds on a regular basis (at least every 90 days is recommended). Ensure that user accounts are current and that users are only allocated access rights on the system that they need to perform their jobs. Ensure that you monitor third party access to the system to this same level.

- Pay special attention to administrative level accounts. Restrict administrative access to as few personnel as possible. Configure the system to notify proper security and IT personnel when a new administrative account is added to the network. This could be a sign that a hack is in progress.

- Regularly monitor policies and procedures to ensure that they are effective and meet the security goals of the organization. I recommend doing this as a regular part of business continuity testing and review.
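To make the correlation tip above a little more concrete, here is a minimal sketch of what "combining output from different tools" can look like once events are centralized. The event fields (`time`, `tool`, `src_ip`), the ten-minute window and the sample data are all assumptions made up for illustration; a real log aggregator will have its own schema and far better tooling.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def correlate_by_source(events, window=timedelta(minutes=10), min_tools=2):
    """Return source IPs reported by at least `min_tools` different tools
    within `window` of each other, mapped to the sorted tool names."""
    by_source = defaultdict(list)
    for event in events:
        by_source[event["src_ip"]].append(event)

    suspicious = {}
    for src_ip, items in by_source.items():
        items.sort(key=lambda e: e["time"])
        for i, first in enumerate(items):
            # Everything from this event forward that falls inside the window.
            in_window = [e for e in items[i:] if e["time"] - first["time"] <= window]
            tools = {e["tool"] for e in in_window}
            if len(tools) >= min_tools:
                suspicious[src_ip] = sorted(tools)
                break
    return suspicious


# Hypothetical, already-normalized events from two different tools.
events = [
    {"time": datetime(2018, 4, 20, 9, 0), "tool": "firewall", "src_ip": "203.0.113.7"},
    {"time": datetime(2018, 4, 20, 9, 4), "tool": "antivirus", "src_ip": "203.0.113.7"},
    {"time": datetime(2018, 4, 20, 9, 5), "tool": "firewall", "src_ip": "198.51.100.2"},
]
print(correlate_by_source(events))
```

A single firewall hit is easy to ignore; the same address showing up in two unrelated tools within a few minutes is the kind of thing a human should look at.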

Sunshine on a “cloudy” day…

I recently saw an article targeted at non-profits that was a bit frightening. The statement was that small non-profits, and by extension many businesses, could benefit from the ease of deployment of cloud services. The writers presented AWS, Dropbox, DocuSign, et al. as a great way to increase your infrastructure with very little staff.

While the writers were not wrong, they were not entirely correct, either. It’s incredibly easy, and can be cost effective, to use a cloud-based infrastructure. However, when things go wrong, they can go REALLY wrong. In February of 2018, FedEx had a misconfigured S3 bucket that exposed a large amount of customer data. That’s simply the first of many notable breaches that have occurred so far in 2018, and the list grows as you travel back in time. Accenture, Time Warner and Uber are a few of the big names with AWS security issues in 2017.

So, if the big guys who have a staff can’t get it right, what can you do? A few things to consider:

- What, specifically, are you deploying to the cloud? A static website carries less business risk than an application that contains or transfers client data.

- What are the risks associated with the cloud deployment? Type of data, does it contain PII, etc.? What is the business impact if this data were to be compromised?

- Are there any regulatory guidelines for your industry that could affect cloud deployment of data?

- Have you done your due diligence on cloud security in general? The Cloud Security Alliance has a lot of good resources available for best practices. Adam from MSI wrote a good article on some of the permissions issues recently, as well.

- What resources do you have or can you leverage to make sure that your deployment is secure? If you don’t have internal resources, consider leveraging an external resource like MSI to assist.

Remember – just because you can, doesn’t always mean you should. But cloud infrastructure can be a great resource if you handle it properly.

Questions, comments? I’d love to hear from you. I can be reached at lwallace@microsolved.com, or on Twitter @TheTokenFemale.

It’s OK to save all my passwords in Word, no?

In a follow-up to my last blog on password managers: many of my family members and friends have still not picked up the habit of using them, mostly because they refuse to accept that there is a small price to pay for increased security. That price is a couple of clicks to bring up the password manager app and look up the password for a web site login.

Password managers are still the recommended way to store a unique and complex password for each set of login credentials you have, whether for a domain, a computer or a web site. But for those family members and friends who have refused to adopt a password manager, I recommend the following.

Instead of saving your passwords in a Word document, save your password hints. We’ll get to how to write these hints later. Save the Word document encrypted, and make the filename something less obvious than passwords.docx – maybe “Favorite Movies.docx.” Depending on PC/Mac version, the encryption feature is found in the Save process under Tools or Options, Security. This is one password you need to remember – make it a good and memorable one.

You may think, Word encryption is not the greatest. True. But this layer of security – encrypting the document and giving it a non-enticing file name – at least keeps out the nosey, non-hacker person who might come across your file.

As to what information to keep in the file, you don’t want to store your passwords as-is (in plaintext) in a Word document, even though the file may be encrypted. You should only store information that can help you remember the password. For example, if your passwords are famous movie quotes, the hints for the credentials of 2 sites could be saved as:

ebay.com = Clint Eastwood

amazon.com = Jack Nicholson

Each of the above actors has a famous, iconic movie line, and using a consistent transformation method on those quotes, the actual passwords for the sites would be:

ebay.com = gOaheaDmakEmYdaY

amazon.com = yoUcanThandlEthEtrutH

In the above example, the actual passwords are formed by taking the quote that each hint points to, capitalizing the last character of each word, lowercasing everything else, and eliminating all spaces and special characters. This transformation process should be memorized and be consistent for all the passwords saved in the Word document.

You can choose whichever transformation process you like, as long as it’s consistent so you can remember it and don’t have to write it down somewhere, e.g. capitalize the last 2 characters of each word, capitalize the second character of each word, or substitute every first character with a number.
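If it helps to see the transformation spelled out, here is a small sketch of the "capitalize one character of each word" rule. The function name and the `position` parameter are my own illustration, not part of any tool. The whole point of the scheme is that the rule lives in your head, so treat this as a way to check your understanding rather than something to save next to the hints file.

```python
def transform(quote, position=-1):
    """Lowercase each word, drop spaces and special characters, and
    capitalize the character at `position` (-1 means the last character,
    1 means the second character)."""
    parts = []
    for raw in quote.split():
        word = "".join(ch for ch in raw.lower() if ch.isalnum())
        if not word:
            continue
        index = position if position >= 0 else len(word) + position
        if 0 <= index < len(word):
            word = word[:index] + word[index].upper() + word[index + 1:]
        parts.append(word)
    return "".join(parts)


print(transform("Go ahead. Make my day."))              # gOaheaDmakEmYdaY
print(transform("You can't handle the truth!"))         # yoUcanThandlEthEtrutH
print(transform("Go ahead. Make my day.", position=1))  # gOaHeadmAkemYdAy
```

Words that are too short for the chosen position are simply left in lowercase, which is one more detail you would need to decide on and remember.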

This way, if someone gets a hold of your Word document, and manages to decrypt its password, they will only have a list of password hints, that only you can transform into the actual passwords.

Some other examples of passwords and password hints that you could use:

- Names of friends and family members, with their birth years, as passwords, and the city where they live as password hints, e.g.

- Save in the Word document your password hints:

ebay.com = Denver, CO

amazon.com = Chicago, IL

- And from the above password hints, you know your best friend lives in Denver and your aunt in Chicago.

- Password for ebay.com = JimmyJones1989

- Password for amazon.com = GertrudeSmith1955

You could even do this:

- Save in the Word document the following password hints:

ebay.com = Go ahead. Make my day.

amazon.com = You can't handle the truth!

- And from the above password hints, use your memorized transformational process, e.g. capitalize the 2nd character of each word.

- Password for ebay.com = gOaHeadmAkemYdAy

- Password for amazon.com = yOucAnthAndletHetRuth

This is “security through obscurity” – much of the information is available, but for the information to be effective (for the passwords to work), it needs to be manipulated using an algorithm that only the user knows and has committed to memory.

You can then email yourself this document. And when you need to look up the password for some site, look up the email, enter the document password to decrypt it, get the info, and use your memorized transformational process to re-construct your password for that site.

This is better than nothing, and better than the alternative of using the same simple, easy to remember (and easy to crack) password for all logins. Best to use a password manager, though.

Be safe…

Resources:

https://www.cnet.com/how-to/the-safe-way-to-write-down-your-passwords/

https://www.symantec.com/connect/articles/simplest-security-guide-better-password-practices

Let’s Talk… But First “Let’s Encrypt”!

One of the common findings security assessment services encounter when they evaluate the Internet presence of an organization is sites where login credentials travel over the Internet unencrypted.

So: HTTP not HTTPS.

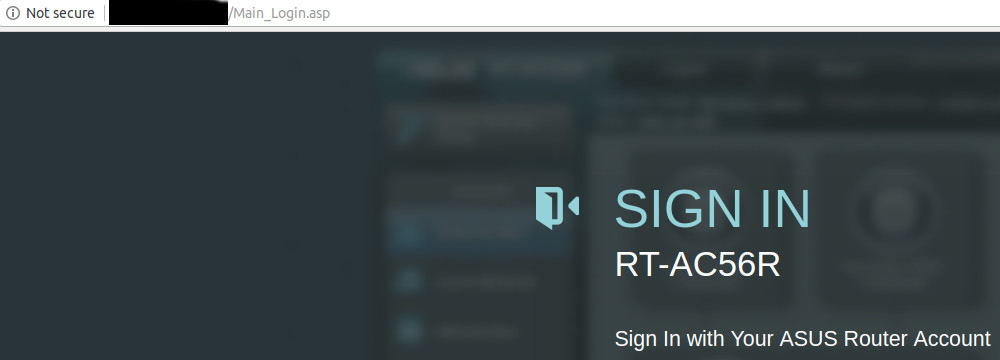

You’ve all seen the “not secure” warnings:

The intent of the browser’s warning is to make the user aware that there is a risk of credential loss with this “http-only” unencrypted login.

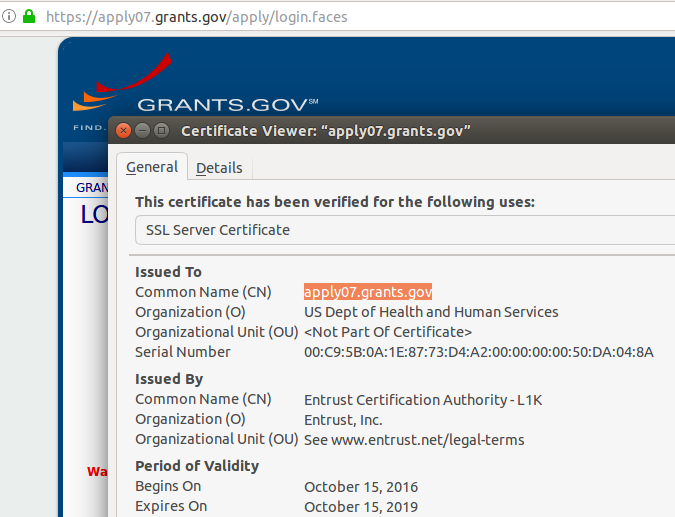

The solution is HTTPS of course, but that requires that a “certificate” be created, signed by some “certificate authority” (CA), and that the certificate be installed on the website.

If the user connects via HTTPS (not HTTP), the browser and the server negotiate an encrypted communication channel based on the SSL/TLS protocol and all is well… almost.

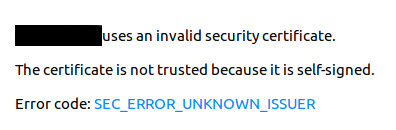

The traffic will be encrypted, but if the particular CA that created the website’s certificate is not itself known to the browser (and those trusts are either built-in or added by an IT department) the user may see something like the following:

The browser will give the user the option to trust the certificate and proceed – but this is universally regarded as a bad practice as it makes the end user more inclined to disregard all such security warnings and thus be more susceptible to phishing attacks that intentionally direct them to sites that are not what they claim to be.

The usual solution is to purchase certificates from a known and accepted CA (e.g. DigiCert, Comodo, GoDaddy, Entrust, etc.).

The CAs that issue these certificates will perform some checks to ensure the requester is legitimate and that the domain is in fact controlled by the requester. The extent to which identity is verified and the breadth of coverage largely determine the cost – which can be anywhere from tens to thousands of dollars. SSLShopper provides some examples.

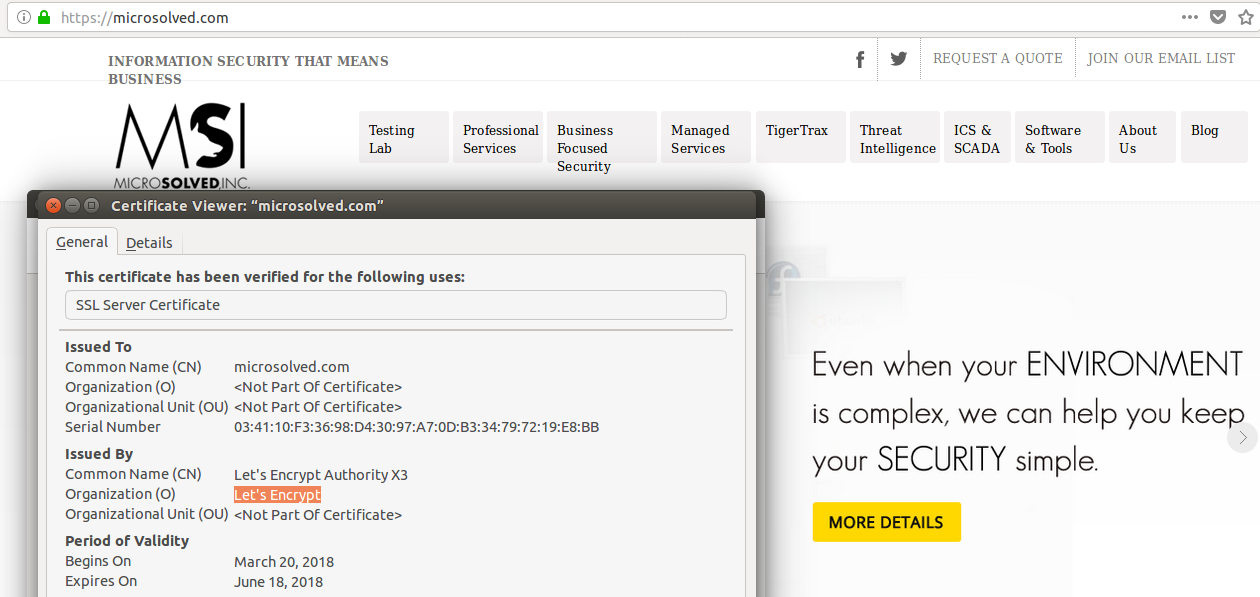

Users who browse to such sites will see the coveted “green lock” indicating an encrypted HTTPS connection to a validated website:

The problem is the cost and administrative delay associated with purchasing certificates from accepted CAs, keeping track of those certificates, and ensuring they are renewed when they expire.

As a result some sites either dispense with HTTPS altogether or rely on “self-signed” certificates with the attendant risk.

This is where “Let’s Encrypt” comes in.

“Let’s Encrypt” is an effort led by some of the major players in the industry to lower (read: eliminate) the cost of legitimate HTTPS communications and move the Internet as much as possible to HTTPS (and other SSL/TLS encrypted traffic) as the norm.

The service is entirely free and the administrative costs are minimal. Open-source software (Certbot) exists to automate installation and maintenance.

Quoting from the Certbot site:

“Certbot is an easy-to-use automatic client that fetches and deploys SSL/TLS certificates for your webserver. Certbot was developed by EFF and others as a client for Let’s Encrypt … Certbot will also work with any other CAs that support the ACME protocol.…

Certbot is part of EFF’s larger effort to encrypt the entire Internet. Websites need to use HTTPS to secure the web. Along with HTTPS Everywhere, Certbot aims to build a network that is more structurally private, safe, and protected against censorship”

The software allows “Let’s Encrypt” – acting as a CA – to dynamically validate that the requester has control of the domain associated with the website and issue the appropriate cert. The certificates are good for 90 days – but the entire process of revalidation can be easily automated.
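Since the certificates only last 90 days, it is worth keeping an eye on expiry even when renewal is automated. The sketch below uses only the Python standard library (`ssl`, `socket`, `datetime`). The function names are mine, and the sample date is made up. Certbot has its own renewal mechanism, so this is just an independent check under those assumptions, not a replacement for it.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after, now=None):
    """`not_after` is a certificate's notAfter string as reported by the
    ssl module, e.g. 'Jul 19 12:00:00 2018 GMT'."""
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return (expires - now).days


def fetch_not_after(host, port=443, timeout=5.0):
    """Connect to `host` over TLS and return the certificate's notAfter string.
    Requires network access and a certificate the local trust store accepts."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


# Offline example with a made-up expiry date.
issued_check = datetime(2018, 4, 20, 12, 0, tzinfo=timezone.utc)
print(days_until_expiry("Jul 19 12:00:00 2018 GMT", now=issued_check))  # 90

# Live check (needs network access):
# print(days_until_expiry(fetch_not_after("letsencrypt.org")))
```

If the number of days remaining drops well below what your renewal schedule should allow, the automation has probably stopped working and someone needs to look at it.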

The upshot is that the barriers that have prevented universal adoption of HTTPS have largely been removed. The CAs’ economic model is changing and some have left the business entirely.

Do you still have HTTP-only sites and are they a chronic finding in your security assessments?

Has cost and administrative overhead been a reason for that?

Take a look at “Let’s Encrypt” now!

One caveat:

Just because a site has a legitimate certificate and is using HTTPS does NOT mean it is necessarily safe. You may find your users are under the impression that it is. As always, user education is a required part of your security program.

See:

HTTPS:

- https://en.wikipedia.org/wiki/HTTPS

- https://en.wikipedia.org/wiki/Public_key_certificate

- https://www.quora.com/What-is-certificate-authority-What-are-some-examples-of-them

- https://www.sslshopper.com/ – SSL costs

“Let’s Encrypt”:

- https://letsencrypt.org/

- https://chooseprivacyweek.org/resources/https-lets-encrypt/

- https://www.eff.org/encrypt-the-web


Make Smart Devices Your Business Friend, Not Your Business Foe

One good way to improve your information security posture and save resources at the same time is to strictly limit the attack surfaces and attack vectors present on your network. It’s like having a wall with a thousand doors in it. The more of those doors you close off, the easier it is to guard the ones that remain. However, we collectively continue to let personnel use business assets and networks for high-risk activities such as web surfing, shopping, checking social media sites and a plethora of other activities that have nothing to do with business.

Most organizations to this day still allow their personnel to access the Internet at will, download and upload programs from there, employ computer ports like USB, etc. But the thing is, this is now; not ten years ago. Virtually everyone in the working world has a smart phone with them at all times. Why not just let folks use these devices for all their ancillary online activities and save the business systems for business purposes?

And for those employees and job types that truly need access to the Internet there are other protections you can employ. The best is to whitelist sites available to these personnel while ensuring that even this access is properly monitored. Another way is to stand up a separate network for approved Internet access with no (or strictly filtered) access to the production network. In addition, it is important to make sure employees use different passwords for business access and everything else; business passwords should only be used for that particular access alone.

Another attack vector that should be addressed is allowing employees local administration rights to their computers. Very few employees in most organizations actually need USB ports, DVD drives and the like to perform their business tasks. This goes double for the ability to upload and download applications to their computers. Any application code present on an organization’s production network should be authorized, approved and inventoried. Applications not on this list that are detected should be immediately researched and dealt with.
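Checking what is installed against the approved inventory is an easy thing to automate. Here is a minimal sketch; the application names and the idea that your discovery tool hands you a plain list of names are assumptions for illustration only.

```python
def unapproved_applications(discovered, approved):
    """Return the discovered application names that are not on the
    approved inventory, compared case-insensitively."""
    approved_names = {name.strip().lower() for name in approved}
    found_names = {name.strip().lower() for name in discovered}
    return sorted(found_names - approved_names)


# Hypothetical inventory and scan results.
approved = ["Microsoft Word", "Accounting Suite", "PDF Reader"]
discovered = ["microsoft word", "PDF Reader", "Torrent Client", "Remote Desktop Helper"]
print(unapproved_applications(discovered, approved))
```

Anything that comes back from a check like this is what should be "immediately researched and dealt with."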

Imagine how limiting attack vectors and surfaces in these ways would help ease the load on your system security and administrative personnel. It would give them much less to keep track of and, consequently, more time to properly deal with the pure business assets that remain.

Cloudy With a Chance of Misconfigurations

Many organizations have now embraced cloud platforms like Amazon AWS or Microsoft Azure, whether they are using them for just a few services or have moved part or all of their infrastructure there. No matter the service, though, configuration isn’t foolproof, and each one requires specific knowledge to configure in a secure way.

In some cases we have seen these services configured in a way that isn’t best practice, which led to exposure of sensitive information, or compromises through services that should not have been exposed. In many instances there are at least some areas that can be hardened, or features enabled, to reduce risk and improve monitoring capabilities.

So, what should you be doing? We’ll take a look at Amazon AWS today, and some of the top issues.

One seemingly pervasive issue is inappropriate permissions on S3 buckets. Searches on S3 incidents will turn up numerous stories about companies exposing sensitive data due to improper configuration. How can you prevent that?

Firstly, when creating your buckets, consider your permissions very carefully. If you want to publicly share data from a bucket, consider granting ‘Everyone’ read permissions to the specific resources, instead of the entire bucket. Never allow the ‘Everyone’ group to have write permissions, on the bucket, or on individual resources. The ‘Everyone’ group applies literally to everyone, your employees and any attackers alike.
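As a rough illustration of auditing for this, the sketch below inspects a bucket ACL that has already been retrieved as a Python dictionary. I am assuming the structure returned by the S3 GetBucketAcl call (a `Grants` list with `Grantee` and `Permission` entries) and that the console's 'Everyone' corresponds to the `AllUsers` group URI; the sample ACL is invented, and bucket policies, which can also make data public, are not covered here.

```python
ALL_USERS_URI = "http://acs.amazonaws.com/groups/global/AllUsers"
WRITE_PERMISSIONS = {"WRITE", "WRITE_ACP", "FULL_CONTROL"}


def public_grants(acl):
    """Return the permissions granted to the AllUsers ('Everyone') group."""
    permissions = []
    for grant in acl.get("Grants", []):
        grantee = grant.get("Grantee", {})
        if grantee.get("Type") == "Group" and grantee.get("URI") == ALL_USERS_URI:
            permissions.append(grant["Permission"])
    return permissions


def has_public_write(acl):
    """True if 'Everyone' can write objects or change the ACL."""
    return any(p in WRITE_PERMISSIONS for p in public_grants(acl))


# Invented ACL for illustration.
sample_acl = {
    "Grants": [
        {"Grantee": {"Type": "CanonicalUser", "ID": "owner-id"}, "Permission": "FULL_CONTROL"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "READ"},
        {"Grantee": {"Type": "Group", "URI": ALL_USERS_URI}, "Permission": "WRITE"},
    ]
}
print(public_grants(sample_acl))     # ['READ', 'WRITE']
print(has_public_write(sample_acl))  # True
```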

Secondly, take advantage of the logging capability of S3, and monitor the logs. This will help identify any inappropriately accessed resources, whether through inadvertently exposed buckets, or through misuse of authorization internally.

Another common issue is ports unnecessarily exposed on EC2 resources. This happens through misconfigurations in VPC NACLs or Security Groups, which act as firewalls and are sometimes found configured to allow inbound traffic to any port from any IP. NACLs and Security Groups should be configured to allow the least traffic possible to the destination, restricting by port and by IP. Don’t forget about restricting outbound traffic as well. For example, your database server probably only needs to talk to the web server and system update servers.
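Here is a sketch of how you might flag the "any port from any IP" case. It assumes a Security Group description in the dictionary shape returned by the EC2 DescribeSecurityGroups call (`IpPermissions`, `IpRanges`, `CidrIp` and so on); the sample group is invented, and NACLs would need a similar but separate check.

```python
def world_open_rules(security_group):
    """Return inbound rules that allow traffic from any IPv4 or IPv6 address."""
    findings = []
    for rule in security_group.get("IpPermissions", []):
        from_anywhere = any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", []))
        from_anywhere = from_anywhere or any(
            r.get("CidrIpv6") == "::/0" for r in rule.get("Ipv6Ranges", [])
        )
        if not from_anywhere:
            continue
        # Protocol "-1" means all protocols and ports.
        all_ports = rule.get("IpProtocol") == "-1" or (
            rule.get("FromPort") == 0 and rule.get("ToPort") == 65535
        )
        findings.append({
            "protocol": rule.get("IpProtocol"),
            "from_port": rule.get("FromPort"),
            "to_port": rule.get("ToPort"),
            "all_ports": all_ports,
        })
    return findings


# Invented Security Group for illustration.
sample_group = {
    "GroupId": "sg-example",
    "IpPermissions": [
        {"IpProtocol": "-1", "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443, "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 3306, "ToPort": 3306, "IpRanges": [{"CidrIp": "10.0.1.0/24"}]},
    ],
}
for finding in world_open_rules(sample_group):
    print(finding)
```

A public HTTPS port open to the world may be perfectly intentional; the entries with `all_ports` set to true are the ones that almost never are.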

The last issue we’ll discuss today is IAM, the Identity and Access Management interface. Firstly, you should be using IAM to configure users and access, instead of sharing the root account among everyone. Secondly, make sure IAM users and keys are configured correctly, with the least amount of privileges necessary for that particular user. I also recommend requiring multifactor authentication, particularly on the root account, any users in the PowerUsers or Admins group, and any groups you have with similar permissions.
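One quick least-privilege check is to look for policy statements that allow every action on every resource. The sketch below works on an IAM policy document already loaded as a Python dictionary, using the standard policy JSON elements (`Statement`, `Effect`, `Action`, `Resource`). The sample policy is invented, and this only catches the bluntest case, not every over-permissive policy.

```python
def as_list(value):
    """IAM policy elements may be a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy):
    """Return Allow statements that grant all actions on all resources."""
    findings = []
    for statement in as_list(policy.get("Statement", [])):
        if statement.get("Effect") != "Allow":
            continue
        actions = as_list(statement.get("Action", []))
        resources = as_list(statement.get("Resource", []))
        if "*" in actions and "*" in resources:
            findings.append(statement)
    return findings


# Invented policy for illustration.
sample_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
        {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
}
print(len(overly_broad_statements(sample_policy)))  # 1
```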

That’s all for today. And remember, the good news here is that you can configure these systems and services to be as secure as what is sitting on your local network.

Weekly Threat Brief 4/20

Starting today MSI will begin publishing our Weekly Threat Brief! This is a light compilation of cyber articles and highlights. Some articles have been broken down by verticals, which include Financial, Business and Retail, SCADA, Healthcare and Government/Military. Please take a moment to click on the link and read! Hope you enjoy it!

Please provide any feedback to info@microsolved.com

State Of Security Micro-Podcast Episode 14

This is my first foray into the world of Podcast! I am the new Chief Operating Officer here at MicroSolved and we are getting ready to launch a new Log Analysis product. This micro-episode features members of the staff at MSI and will talk through some of the highlights of our new offering. This is our first attempt as a team so be gentle as we will get better! Thanks for listening!

Business Impact Analysis: A Wealth of Information at Your Fingertips

Are you a Chief Information Security Officer or IT Manager stuck with the unenviable task of bringing your information security program into the 21st Century? Why not start the ball rolling with a business impact analysis (BIA)? It will provide you with a wealth of useful information, and it takes a good deal of the weight from your shoulders by involving personnel from every business department in the organization.

BIA is traditionally seen as part of the business continuity process. It helps organizations recognize and prioritize which information, hardware and personnel assets are crucial to the business so that proper planning for contingency situations can be undertaken. This is very useful in and of itself, and is indeed crucial for proper business continuity and disaster recovery planning. But what other information security tasks can BIA information help you with?

When MSI does a BIA, the first thing we do is issue a questionnaire to every business department in the organization. These questionnaires are completed by the “power users” in each department, who are typically the most experienced and knowledgeable personnel in the business. This means that not only do you get the most reliable information possible, but that one person or one small group is not burdened with doing all of the information gathering. Typical responses include (but are not limited to):

- A list of every business function each department undertakes

- All of the hardware assets needed to perform each business function

- All of the software assets needed to perform each business function

- Inputs needed to perform each business function and where they come from

- Outputs of each business function and where they are sent

- Personnel needed to perform each business function

- Knowledge and skills needed to perform each business function

So how does this knowledge help jumpstart your information security program as a whole? First, in order to properly protect information assets, you must know what you have and how it moves. In cutting-edge information security guidance, the first controls recommended are inventories of the devices and software applications present on company networks. The BIA lists all of the hardware and software assets needed to perform each business function. So in effect you have your starting inventories. This not only tells you what you need, but is useful in exposing assets on your network that waste time and effort and are not necessary; if it’s not on the critical lists, you probably don’t need it.

In MSI’s own 80/20 Rule of Information Security, the first requirement is not only producing inventories of software and hardware assets, but mapping of data flows and trust relationships. The inputs and outputs listed by each business department include these data flows and trust relationships. All you have to do is compile them and put them into a graphical map. And I can tell you from experience; this is a great savings in time and effort. If you have ever tried to map data flows and trust relationships as a stand-alone task, you know what I mean!
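To show how little work the compiling step can be, here is a sketch that turns questionnaire responses into a list of data flow edges you could feed to a diagramming tool. The field names (`department`, `function`, `inputs`, `outputs`) and the sample responses are assumptions for illustration, not the actual format of MSI's questionnaire.

```python
def build_data_flows(responses):
    """Turn BIA responses into sorted (source, destination) edges,
    where each business function sits between its inputs and outputs."""
    edges = set()
    for response in responses:
        node = f'{response["department"]}: {response["function"]}'
        for source in response.get("inputs", []):
            edges.add((source, node))
        for destination in response.get("outputs", []):
            edges.add((node, destination))
    return sorted(edges)


# Invented responses for illustration.
responses = [
    {
        "department": "Accounting",
        "function": "Payroll",
        "inputs": ["HR timesheet system"],
        "outputs": ["Bank payroll portal"],
    },
    {
        "department": "HR",
        "function": "Timekeeping",
        "inputs": ["Badge readers"],
        "outputs": ["HR timesheet system"],
    },
]
for source, destination in build_data_flows(responses):
    print(f"{source} -> {destination}")
```

Every edge that crosses a department or leaves the organization is also a trust relationship worth a second look.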

Another security control a BIA can help you implement is network segmentation and enclaving. The MSI 80/20 Rule has network enclaving as its #6 control. The information from a good BIA makes it easy to see how assets are naturally grouped, and therefore makes it easy to see the best places to segment the network.

How about egress filtering? Egress filtering is widely recognized as one of the most effective security controls in preventing large scale data loss, and the most effective type of egress filtering employs white listing. White listing is typically much harder to tune and implement than black listing, but is very much more effective. With the information a BIA provides you, it is much easier to construct a useful white list; you have what each department needs to perform each business function at your fingertips.
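As a rough sketch of that idea, the code below builds a white list of outbound destinations from BIA responses and checks a hostname against it. The `external_hosts` field and the example domains are hypothetical; real egress filtering happens on your firewall or proxy, so this only illustrates the matching logic.

```python
def build_whitelist(responses):
    """Collect every external host that some business function says it needs."""
    return {
        host.lower().rstrip(".")
        for response in responses
        for host in response.get("external_hosts", [])
    }


def egress_allowed(host, whitelist):
    """Allow a host if it is on the list or is a subdomain of a listed host."""
    host = host.lower().rstrip(".")
    return any(host == allowed or host.endswith("." + allowed) for allowed in whitelist)


# Invented BIA responses for illustration.
bia_responses = [
    {"function": "Payroll", "external_hosts": ["payroll.example.com"]},
    {"function": "Shipping", "external_hosts": ["carrier.example.net"]},
]
whitelist = build_whitelist(bia_responses)
print(egress_allowed("api.payroll.example.com", whitelist))  # True
print(egress_allowed("filesharing.example.org", whitelist))  # False
```

Note that the suffix match requires a leading dot, so a lookalike such as `evilpayroll.example.com` does not slip through.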

Then there is security and skill gap training. The BIA tells you what information users need to know to perform their jobs, so that helps you make sure that personnel are trained correctly and with enough depth to deal with contingency situations. Also, knowing where all your critical assets lie and how they move helps you make sure you provide the right people with the right kind of security training.

And there are other crucial information security mechanisms that a BIA can help you with. What about access control? Wouldn’t knowing the relative importance of assets and their nexus points help you structure AD more effectively? In addition, there is physical security to consider. Knowing where the most crucial information lies and what departments process it would help you set up internal secure areas and physical safeguards, wouldn’t it?

The upshot of all of this is that where information security is concerned, you can’t possibly know too much about how your business actually works. Ensure that you maintain a detailed BIA and it will pay you back for the effort every time.