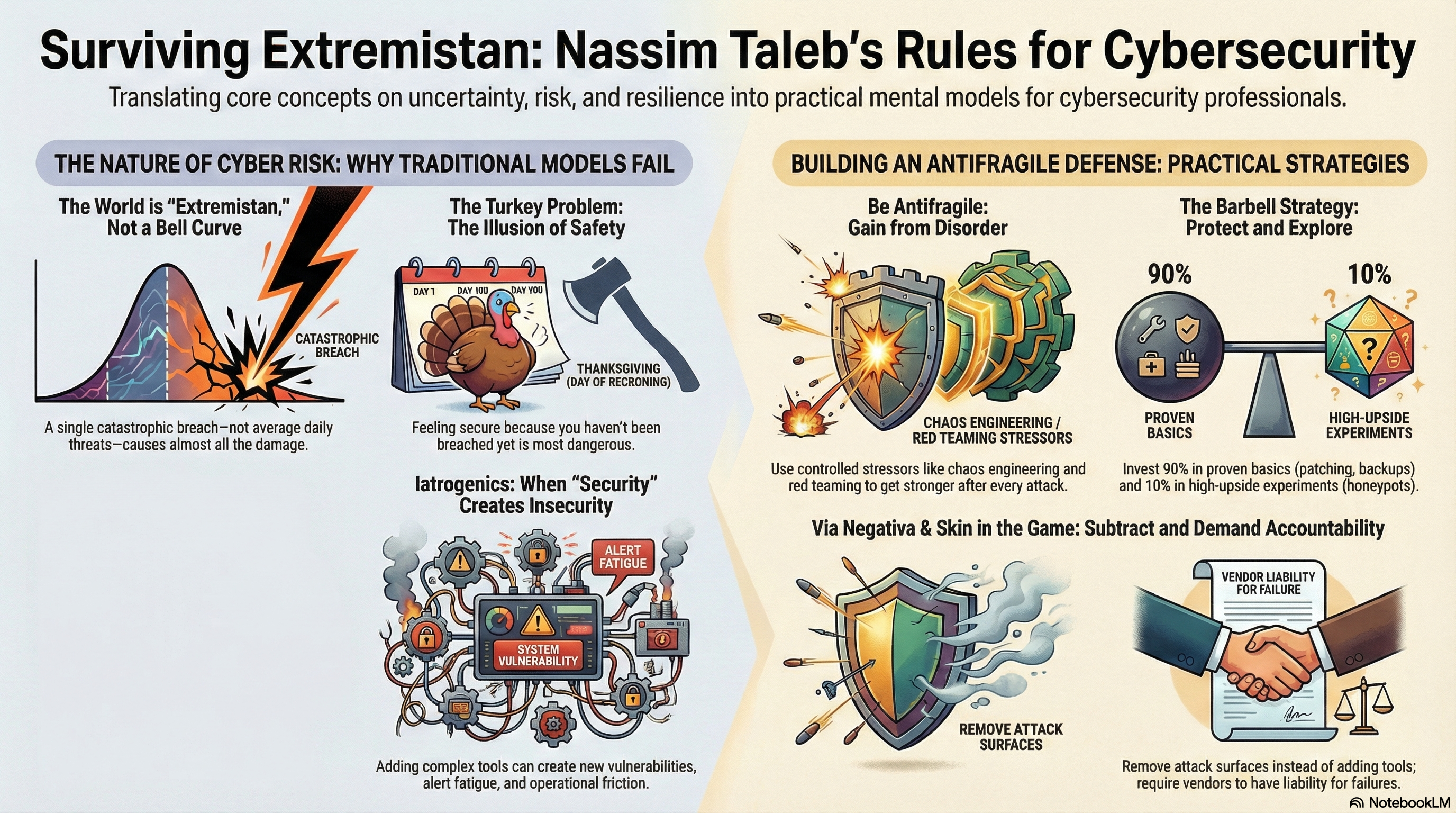

We’ve seen fraud evolve before. We’ve weathered phishing, credential stuffing, card skimming, and social engineering waves—but what’s coming next makes all of that look like amateur hour. According to Experian and recent security forecasting, we’re entering a new fraud era. One where AI-driven agents operate autonomously, build convincing synthetic identities at scale, and mount adaptive, shape-shifting attacks that traditional defenses can’t keep up with.

For small credit unions and community banks, this isn’t a hypothetical future—it’s an urgent call to action.

The Rise of Synthetic Realities

Criminals are early adopters of innovation. Always have been. But now, 80% of observed autonomous AI agent use in cyberattacks is originating from criminal groups. These aren’t script kiddies with GPT wrappers—these are fully autonomous fraud agents, built to execute entire attack chains from data harvesting to cash-out, all without human intervention.

They’re using the vast stores of breached personal data to forge synthetic identities that are indistinguishable from real customers. The result? Hyper-personalized phishing, credential takeovers, and fraudulent accounts that slip through onboarding and authentication checks like ghosts.

Worse yet, quantum computing is looming. And with it, the shift from “break encryption” to “harvest now, decrypt later” is already in motion. That means data stolen today—unencrypted or encrypted with current algorithms—could be compromised retroactively within a decade or less.

So what can small institutions do? You don’t have the budget of a multinational bank, but that doesn’t mean you’re defenseless.

Three Moves Every Credit Union Must Make Now

1. Harden Identity and Access Controls—Everywhere

This isn’t just about enforcing MFA anymore. It’s about enforcing phishing-resistant MFA. That means FIDO2, passkeys, hardware tokens—methods that don’t rely on SMS or email, which are easily phished or intercepted.

Also critical: rethink your workflows around high-risk actions. Wire transfers, account takeovers, login recovery flows—all of these should have multi-layered checks that include risk scoring, device fingerprinting, and behavioral cues.

And don’t stop at customers. Internal systems used by staff and contractors are equally vulnerable. Compromising a teller or loan officer’s account could give attackers access to systems that trust them implicitly.

2. Tune Your Own Data for AI-Driven Defense

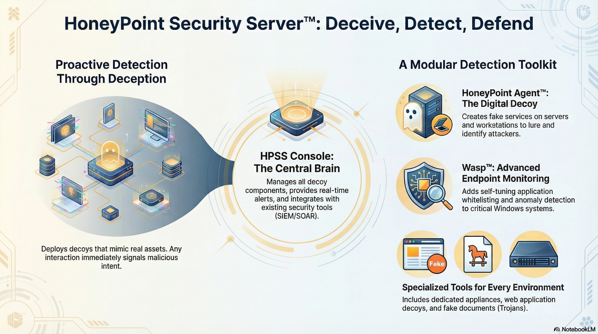

You don’t need a seven-figure fraud platform to start detecting anomalies. Use what you already have: login logs, device info, transaction patterns, location data. There are open-source and affordable ML tools that can help you baseline normal activity and alert on deviations.

But even better—don’t fight alone. Join information-sharing networks like FS-ISAC, InfraGard, or sector-specific fraud intel circles. The earlier you see a new AI phishing campaign or evolving shape-shifting malware variant, the better chance you have to stop it before it hits your members.

3. Start Your “Future Threats” Roadmap Today

You can’t wait until quantum breaks RSA to think about your crypto. Inventory your “crown jewel” data—SSNs, account histories, loan documents—and start classifying which of that needs to be protected even after it’s been stolen. Because if attackers are harvesting now to decrypt later, you’re already in the game whether you like it or not.

At the same time, tabletop exercises should evolve. No more pretending ransomware is the worst-case. Simulate a synthetic ID scam that drains multiple accounts. Roleplay a deepfake CEO fraud call to your CFO. Put AI-enabled fraud on the whiteboard and walk your board through the response.

Final Thoughts: Small Can Still Mean Resilient

Small institutions often pride themselves on their close member relationships and nimbleness. That’s a strength. You can spot strange behavior sooner. You can move faster than a big bank on policy changes. And you can build security into your culture—where it belongs.

But you must act deliberately. AI isn’t waiting, and quantum isn’t slowing down. The criminals have already adapted. It’s our turn.

Let’s not be the last to see the fraud that’s already here.

* AI tools were used as a research assistant for this content, but human moderation and writing are also included. The included images are AI-generated.