If you’re running a SOC in a 1,000–20,000 employee organization, you don’t have an alert problem.

You have an alert economics problem.

When I talk to CISOs and SOC Directors operating hybrid environments with SIEM and SOAR already deployed, the numbers are depressingly consistent:

- 10,000–100,000 alerts per day
- MTTR under scrutiny
- Containment time tracked weekly
- Analyst attrition quietly rising
- Budget flat (or worse)

And then the question:

“How do we handle more alerts without missing the big one?”

Wrong question.

The right question is:

“Which alerts should not exist?”

This article lays out a practical, defensible way to reduce alert volume by 40–60% (a directional figure based on industry norms, not a guarantee) without increasing breach risk. It assumes a hybrid cloud environment with a functioning SIEM and SOAR platform already in place.

This is not theory. This is operating discipline.

First: Define “Without Increasing Breach Risk”

Before you touch a rule, define your safety boundary.

For this exercise, “no increased breach risk” means:

- No statistically meaningful increase in missed high-severity incidents
- No degradation in detection of your top-impact scenarios
- No silent blind spots introduced by automation

That implies instrumentation.

You will track:

Leading metrics

- Alerts per analyst per shift
- % alerts auto-enriched before triage
- Escalation rate (alert → case)
- Median time-to-triage

Lagging metrics

- MTTR
- Incident containment time
- Confirmed incident miss rate (via backtesting + sampling)

If you can’t measure signal quality, you will default back to counting volume.

And volume is the wrong KPI.
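As a minimal sketch of that instrumentation, the leading metrics can be computed from a flat export of alert records. The `Alert` fields and the `leading_metrics` function are hypothetical names chosen for illustration; map them to whatever your SIEM actually exports.

```python
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Alert:
    # Hypothetical record shape; adapt the fields to your SIEM export.
    rule_id: str
    analyst: str
    shift: str                        # e.g. "2024-05-01-day"
    triage_seconds: Optional[float]   # None if the alert was never triaged
    enriched_before_triage: bool
    escalated: bool                   # True if the alert became a case


def leading_metrics(alerts: list[Alert]) -> dict[str, float]:
    """Compute the four leading metrics over a batch of alerts."""
    if not alerts:
        raise ValueError("no alerts supplied")
    total = len(alerts)
    # Distinct (analyst, shift) pairs approximate the number of shifts worked.
    analyst_shifts = {(a.analyst, a.shift) for a in alerts}
    triage_times = [a.triage_seconds for a in alerts if a.triage_seconds is not None]
    return {
        "alerts_per_analyst_shift": total / len(analyst_shifts),
        "pct_auto_enriched": 100.0 * sum(a.enriched_before_triage for a in alerts) / total,
        "escalation_rate": sum(a.escalated for a in alerts) / total,
        "median_time_to_triage_s": median(triage_times) if triage_times else float("nan"),
    }
```

The lagging metrics need case and incident data rather than raw alerts, so they are left out of this sketch.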

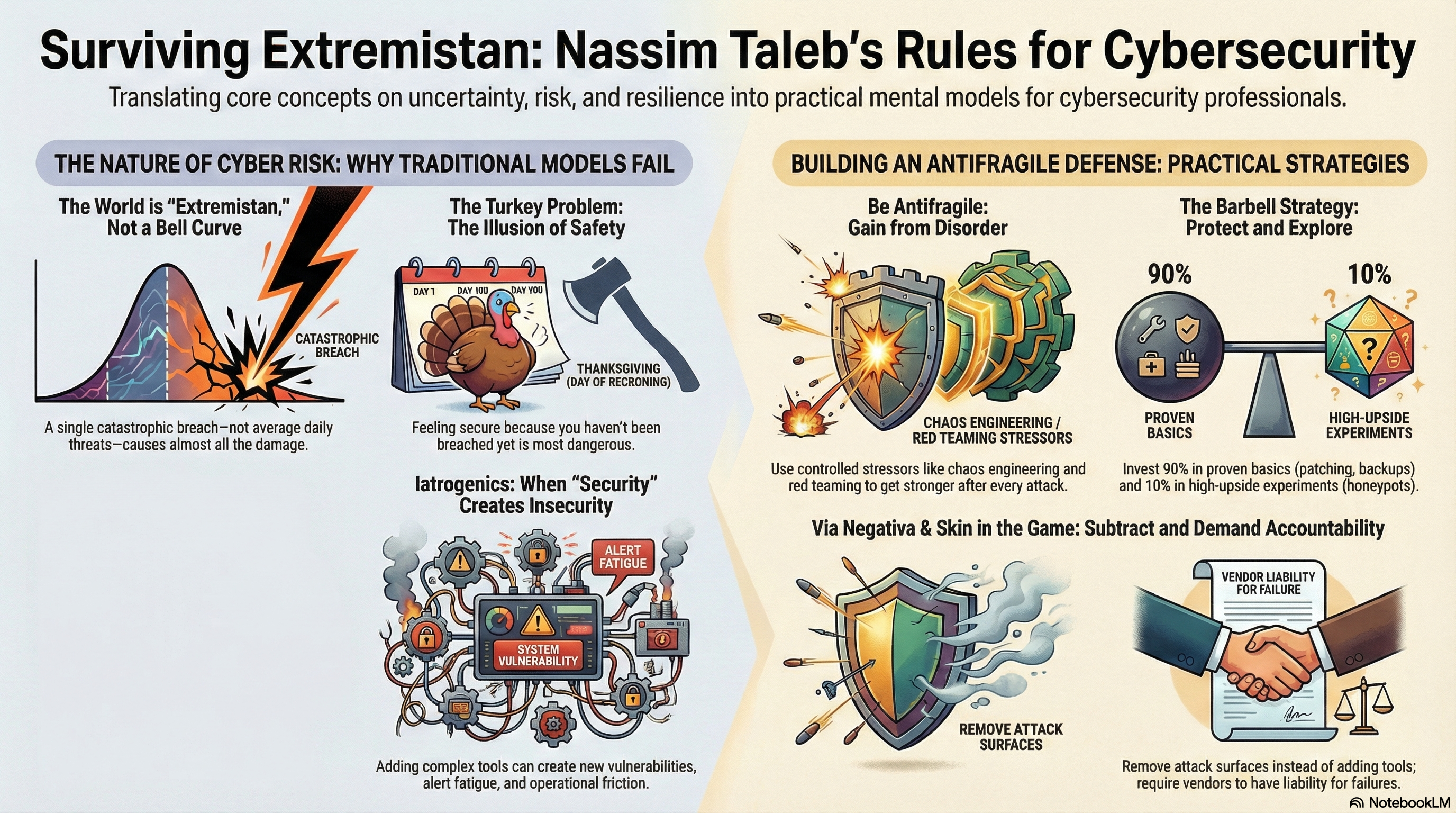

The Structural Problem Most SOCs Ignore

Alert fatigue is usually not a staffing problem.

It’s structural.

Let’s deconstruct it from first principles.

Alert creation = Detection rule quality × Data fidelity × Context availability × Threshold design

Alert handling = Triage logic × Skill level × Escalation clarity × Tool ergonomics

Burnout = Alert volume × Repetition × Low agency × Poor feedback loops

Most organizations optimize alert handling.

Very few optimize alert creation.

That’s why AI copilots layered on top of noisy systems rarely deliver the ROI promised. They help analysts swim faster — but the flood never stops.

Step 1: Do a Real Pareto Analysis (Not a Dashboard Screenshot)

Pull 90 days of alert data.

Per rule (or detection family), calculate:

- Total alert volume
- % of total volume
- Escalations
- Confirmed incidents
- Escalation rate (cases ÷ alerts)
- Incident yield (incidents ÷ alerts)
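The per-rule figures above can be sketched in a few lines of standard-library Python. The input shape (one `(rule_id, escalated, confirmed)` tuple per alert) and the names `RuleStats` and `pareto_by_rule` are assumptions for illustration, not a particular SIEM's export format.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class RuleStats(NamedTuple):
    rule_id: str
    alerts: int
    pct_of_total: float      # share of all alerts, 0-100
    escalations: int
    incidents: int
    escalation_rate: float   # cases / alerts
    incident_yield: float    # incidents / alerts


def pareto_by_rule(rows: Iterable[tuple[str, bool, bool]]) -> list[RuleStats]:
    """rows: one (rule_id, escalated_to_case, confirmed_incident) per alert.

    Returns per-rule stats sorted by alert volume, noisiest rule first.
    """
    volume, cases, incidents = Counter(), Counter(), Counter()
    for rule_id, escalated, confirmed in rows:
        volume[rule_id] += 1
        cases[rule_id] += int(escalated)
        incidents[rule_id] += int(confirmed)
    total = sum(volume.values())
    stats = [
        RuleStats(
            rule_id=rule,
            alerts=n,
            pct_of_total=100.0 * n / total,
            escalations=cases[rule],
            incidents=incidents[rule],
            escalation_rate=cases[rule] / n,
            incident_yield=incidents[rule] / n,
        )
        for rule, n in volume.items()
    ]
    return sorted(stats, key=lambda s: s.alerts, reverse=True)
```

Sorting by volume puts the rules with the most leverage at the top, which is the view a dashboard screenshot usually hides.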

What you will likely find:

A small subset of rules generates a disproportionate share of alerts with negligible incident yield.

Those are your leverage points.

A conservative starting threshold I’ve seen work repeatedly:

- <1% escalation rate
- Zero confirmed incidents in the past 6 months (this check needs a longer lookback than the 90-day export)
- Material volume impact

Those rules go into review.

Not deleted immediately. Reviewed.
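A minimal sketch of that review filter follows. The `RuleSummary` shape is hypothetical, and the 1% volume share used to stand in for "material volume impact" is an assumption you should tune to your own environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleSummary:
    # Hypothetical per-rule summary; populate it from your own analysis.
    rule_id: str
    alerts: int            # alerts in the analysis window
    escalations: int       # alerts that became cases
    incidents_6mo: int     # confirmed incidents in the past 6 months
    pct_of_total: float    # share of total alert volume, 0-100


def needs_review(
    rule: RuleSummary,
    max_escalation_rate: float = 0.01,  # the <1% threshold from the text
    min_volume_pct: float = 1.0,        # assumed cut-off for "material" volume
) -> bool:
    """Flag a rule for review (not deletion) when all three criteria hold."""
    if rule.alerts == 0:
        return False
    escalation_rate = rule.escalations / rule.alerts
    return (
        escalation_rate < max_escalation_rate
        and rule.incidents_6mo == 0
        and rule.pct_of_total >= min_volume_pct
    )
```

The function only nominates rules; the decision to suppress, group, or keep them stays with the people reviewing the list.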

Step 2: Eliminate Structural Noise

This is where a 40–60% reduction becomes realistic.

1. Kill Duplicate Logic

Multiple tools firing on the same behavior.

Multiple rules detecting the same pattern.

Multiple alerts per entity per time window.

Deduplicate at the correlation layer — not just in the UI.

One behavior. One alert. One case.
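One way to sketch correlation-layer deduplication is to key events on entity and behavior and collapse everything inside a time window. This assumes detections from different tools have already been normalized to a shared `behavior` label, which is often the hard part in practice; the names and the one-hour window are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class GroupedAlert:
    entity: str
    behavior: str
    first_seen: float
    last_seen: float
    sources: set[str] = field(default_factory=set)  # tools that reported it
    count: int = 0                                  # raw events collapsed


def deduplicate(events, window_seconds: float = 3600.0) -> list[GroupedAlert]:
    """events: iterable of (timestamp, entity, behavior, source_tool).

    Events sharing (entity, behavior) are merged into one alert as long as
    they arrive within window_seconds of the first event in that group.
    """
    open_groups: dict[tuple[str, str], GroupedAlert] = {}
    result: list[GroupedAlert] = []
    for ts, entity, behavior, source in sorted(events):
        key = (entity, behavior)
        group = open_groups.get(key)
        if group is None or ts - group.first_seen > window_seconds:
            # Window expired or first sighting: open a new grouped alert.
            group = GroupedAlert(entity, behavior, first_seen=ts, last_seen=ts)
            open_groups[key] = group
            result.append(group)
        group.last_seen = ts
        group.sources.add(source)
        group.count += 1
    return result
```

Keeping `sources` and `count` on the grouped alert preserves the fact that several tools agreed, which is itself useful context for triage.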

2. Convert “Spam Rules” into Aggregated Signals

If a vulnerability scanner fires 5,000 times a day, you do not need 5,000 alerts.

You need one:

“Expected scanner activity observed.”

Or, more interestingly:

“Scanner activity observed from non-approved host.”

Aggregation preserves visibility while eliminating interruption.
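A small sketch of that aggregation, assuming scanner detections arrive as a list of source IPs and that you maintain an allowlist of approved scanner hosts. The addresses and the function name are hypothetical.

```python
from collections import Counter

# Hypothetical allowlist of approved scanner hosts.
APPROVED_SCANNERS = {"10.0.5.10", "10.0.5.11"}


def summarize_scanner_activity(source_ips, approved=APPROVED_SCANNERS) -> list[str]:
    """Collapse a day's scanner detections into a handful of summary alerts."""
    hits = Counter(source_ips)
    approved_total = sum(n for ip, n in hits.items() if ip in approved)
    alerts = []
    if approved_total:
        # One informational line for all approved scanners combined.
        alerts.append(f"Expected scanner activity observed ({approved_total} events).")
    # One alert per non-approved source, since these are the interesting ones.
    for ip in sorted(ip for ip in hits if ip not in approved):
        alerts.append(
            f"Scanner activity observed from non-approved host {ip} ({hits[ip]} events)."
        )
    return alerts
```

Thousands of raw events become one expected-activity summary plus one alert for each host that should not be scanning.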

3. Introduce Tier 0 (Telemetry-Only)

This is the most underused lever in SOC design.

Not every signal deserves to interrupt a human.

Define:

- T0 – Telemetry only (logged, searchable, no alert)
- T1 – Grouped alert (one per entity per window)
- T2 – Analyst interrupt
- T3 – Auto-containment candidate

Converting low-confidence detections into T0 telemetry can remove massive volume without losing investigative data.

You are not deleting signal.

You are removing interruption.

Step 3: Move Enrichment Before Alert Creation

Most SOCs enrich after alert creation.

That’s backward.

If context changes whether an alert should exist, enrichment belongs before the alert.

Minimum viable enrichment that actually changes triage outcomes:

- Asset criticality
- Identity privilege level
- Known-good infrastructure lists
- Recent vulnerability context
- Entity behavior history

Decision sketch:

If high-impact behavior AND (privileged identity OR critical asset) AND contextual risk indicators present → Create T2 alert

Else if repetitive behavior with incomplete context → Grouped T1 alert

Else → T0 telemetry
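The decision sketch translates almost directly into code. The following is a minimal illustration, assuming each detection has already been enriched into a set of boolean flags; the `Detection` fields and the `route` function are hypothetical names, and the grouping of "privileged identity or critical asset" as a single condition is my reading of the sketch.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    T0 = "telemetry only"
    T1 = "grouped alert"
    T2 = "analyst interrupt"
    T3 = "auto-containment candidate"


@dataclass(frozen=True)
class Detection:
    # Hypothetical enrichment flags, resolved before any alert is created.
    high_impact_behavior: bool
    privileged_identity: bool
    critical_asset: bool
    risk_indicators_present: bool
    repetitive: bool
    context_complete: bool


def route(d: Detection) -> Tier:
    """Assign a tier following the decision sketch above."""
    if (
        d.high_impact_behavior
        and (d.privileged_identity or d.critical_asset)
        and d.risk_indicators_present
    ):
        return Tier.T2
    if d.repetitive and not d.context_complete:
        return Tier.T1
    return Tier.T0
```

The sketch never returns `Tier.T3`: the decision logic in the text does not define when a detection becomes an auto-containment candidate, so that promotion is left to a separate, explicitly approved policy.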

This is where AI can be valuable.

Not as an auto-closer.

As a pre-alert context aggregator and risk scorer.

If AI is applied after alert creation, you are optimizing cost you didn’t need to incur.

Step 4: Establish a Detection “Kill Board”

Rules should be treated like production code.

They have operational cost. They require ownership.

Standing governance model:

- Detection Lead – rule quality
- SOC Manager – workflow impact
- IR Lead – breach risk validation
- CISO – risk acceptance authority

Decision rubric:

- Does this rule map to a real, high-impact scenario?
- Is its incident yield acceptable relative to volume?
- Would enrichment materially improve precision?
- Is it duplicative elsewhere?

Rules with zero incident value over defined periods should require justification.

Visibility is not the same as interruption.

Compliance logging can coexist with fewer alerts.

Step 5: Automation — With Guardrails

Automation is not the first lever.

It is the multiplier.

Safe automation patterns:

- Context enrichment
- Intelligent routing
- Alert grouping
- Reversible containment with approval gates

Dangerous automation patterns:

- Permanent suppression without expiry
- Auto-closure without sampling
- Logic changes without audit trail

Guardrails I consider non-negotiable:

- Suppression TTL (30–90 days)
- Random sampling of suppressed alerts (0.5–2%)
- Quarterly breach-backtesting
- Full automation decision logging
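Three of these guardrails (TTL, random sampling, and decision logging) can be sketched together. This is an illustration under assumptions, not a SOAR integration: the `Suppression` class, the `disposition` function, and the in-memory `audit_log` list are all hypothetical, and the defaults are simply picked from inside the ranges above.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Suppression:
    rule_id: str
    created: datetime
    ttl: timedelta = timedelta(days=60)  # inside the 30-90 day range
    sample_rate: float = 0.01            # inside the 0.5-2% range

    def is_active(self, now: datetime) -> bool:
        return now < self.created + self.ttl


def disposition(s: Suppression, now: datetime, rng: random.Random, audit_log: list) -> str:
    """Return 'alert', 'sample', or 'suppress' for one alert and log the decision."""
    if not s.is_active(now):
        # The suppression has expired: the rule alerts again until re-approved.
        decision = "alert"
    elif rng.random() < s.sample_rate:
        # A small random slice still reaches a human for review.
        decision = "sample"
    else:
        decision = "suppress"
    audit_log.append((now.isoformat(), s.rule_id, decision))
    return decision
```

Passing in a `random.Random` instance makes the sampling reproducible in tests, and expiry by default means a forgotten suppression fails loud rather than silent.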

Noise today can become weak signal tomorrow.

Design for second-order effects.

Why AI Fails in Noisy SOCs

If alert volume doesn’t change, analyst workload doesn’t change.

AI layered on broken workflows becomes a coping mechanism, not a transformation.

The highest ROI AI use case in mature SOCs is:

Pre-alert enrichment + risk scoring.

Not post-alert summarization.

Redesign alert economics first.

Then scale AI.

What 40–60% Reduction Actually Looks Like

In environments with:

- Default SIEM thresholds
- Redundant telemetry
- No escalation-rate filtering
- No Tier 0
- No suppression expiry
- No detection governance loop

A 40–60% alert reduction is directionally achievable without loss of high-severity coverage.

The exact number depends on detection maturity.

The risk comes not from elimination.

The risk comes from elimination without measurement.

Two-Week Quick Start

If you need results before the next KPI review:

- Export 90 days of alerts.
- Compute escalation rate per rule.
- Identify the bottom 20% of rules by escalation rate.
- Convene a rule rationalization session.
- Pilot suppression or grouping with a TTL.
- Publish signal-to-noise ratio as a KPI alongside MTTR.
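The article does not pin down a formula for signal-to-noise ratio, so the following is one possible definition, stated as an assumption: signal is alerts that escalated to a case, noise is everything else. The function name is hypothetical.

```python
def signal_to_noise(escalated: int, total_alerts: int) -> float:
    """Ratio of escalated alerts (signal) to non-escalated alerts (noise).

    This is an assumed definition; some teams may prefer confirmed
    incidents as the signal term instead of escalations.
    """
    if total_alerts <= 0 or not 0 <= escalated <= total_alerts:
        raise ValueError("need 0 <= escalated <= total_alerts and total_alerts > 0")
    noise = total_alerts - escalated
    if noise == 0:
        return float("inf")  # every alert escalated
    return escalated / noise


# Illustrative numbers only: the same 50 escalations before and after a pilot
# that removes 5,000 low-value alerts.
before = signal_to_noise(escalated=50, total_alerts=10_050)
after = signal_to_noise(escalated=50, total_alerts=5_050)
```

Whichever definition you choose, keep it fixed from one review to the next so the trend is comparable.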

Shift the conversation from:

“How do we close more alerts?”

To:

“Why does this alert exist?”

The Core Shift

SOC overload is not caused by insufficient analyst effort.

It is caused by incentive systems that reward detection coverage over detection precision.

If your success metric is number of detections deployed, you will generate endless noise.

If your success metric is signal-to-noise ratio, the system corrects itself.

You don’t fix alert fatigue by hiring for faster triage.

You fix it by designing alerts to be expensive.

And when alerts are expensive, they become rare.

And when they are rare, they matter.

That’s the design goal.

* AI tools were used as a research assistant for this content, but human moderation and writing are also included. The included images are AI-generated.